ChatGPT adds a new dedicated Health mode to connect medical records and wellness apps

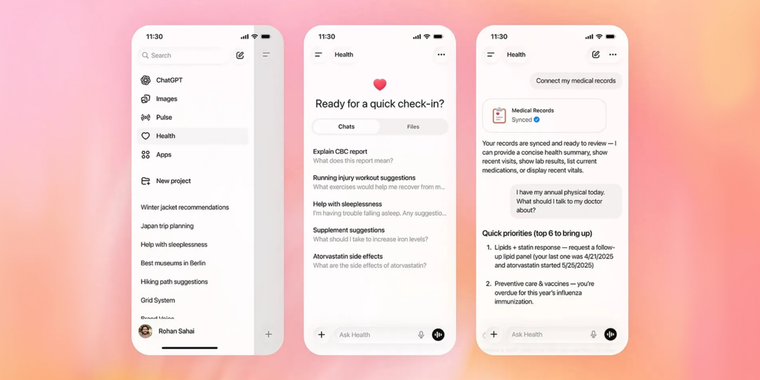

OpenAI has introduced ChatGPT Health, a new feature inside ChatGPT that lets users connect medical records and third-party wellness apps. It is designed to help with everyday health and wellness questions by grounding responses in a user’s own data, but OpenAI says it is not intended for diagnosis or treatment and should not replace medical care.

ChatGPT Health lives in a dedicated tab where chats, files, connected apps, and memory are kept separate from the rest of ChatGPT. OpenAI says information from this space does not carry over to other chats, and conversations in ChatGPT Health are not used to train its foundation models.

Users can connect services like Apple Health for activity and sleep patterns, MyFitnessPal and Weight Watchers for nutrition, and Function for lab testing insights. Medical record connectivity is supported via a partnership with b.well (which Google also partnered with last year), enabling analysis of lab results and clinical history when users opt in. The feature is launching to a small early access group, with broader availability planned in the coming weeks.

Comments

Next up will be "Hackers find way to get ChatGPT to expose people's health data"

...because trusting an LLM (a.k.a. a hallucination machine) with health stuff is a great idea. It's time to eat more glue.

"Comrade, your resting heart rate spiked during yesterday's broadcast. Remember: excessive doubt is unhealthy. Doubleplusgood suggestion: increase prolefeed consumption and report for telescreen recalibration."

Great, more spying! Let's face it, unregulated business and a paranoid Government are things to like.

Sounds like a security and privacy nightmare, especially if this is not covered by the legal protections for medical records. Is it?

Well, OpenAI has confirmed that ChatGPT Health is not compliant with HIPAA (the Health Insurance Portability and Accountability Act), since that generally applies to clinical or professional healthcare settings and not consumer apps. They just say all messages have built-in encryption (not end-to-end) and are excluded from model training by default 🤷‍♂️

Sounds terrible to me, honestly. I will strongly advise my close relatives against it.

Let's be honest: who wanted to outsource their health to a proprietary vendor anyway, especially after the 23andMe fiasco half a year ago? Let's just not trust the "not used to train its foundation models" claim if you are denied access to the source code, especially since Windows TCO kills patients. Use GNU Health instead.
