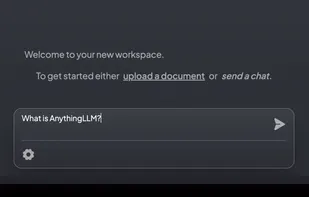

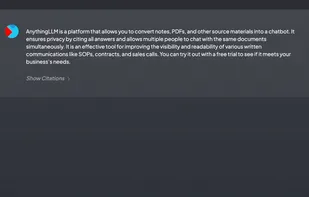

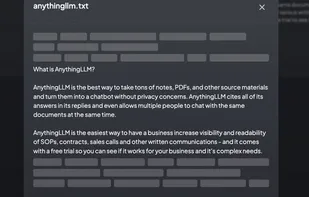

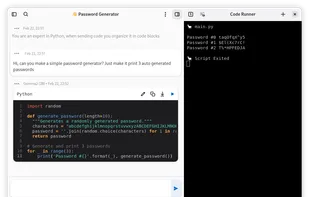

A multi-user ChatGPT for any LLM and vector database. Unlimited documents, messages, and storage in one privacy-focused app. Now available as a desktop application!

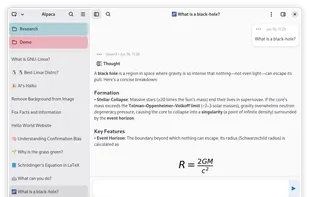

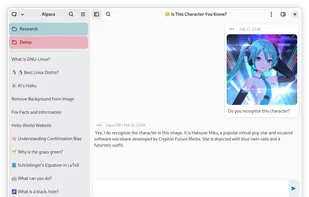

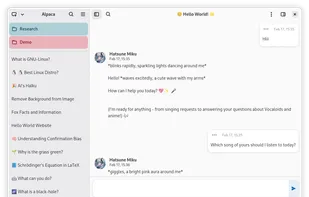

Alpaca is an Ollama client where you can manage and chat with multiple models. Alpaca provides an easy and beginner-friendly way of interacting with local AI.

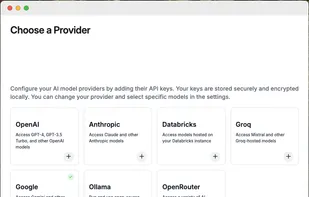

Cherry Studio is a desktop client that supports multiple LLM providers, available on Windows, Mac, and Linux.

Automates engineering tasks seamlessly with local execution, customization, and autonomy, empowering developers with an extensible open-source platform.

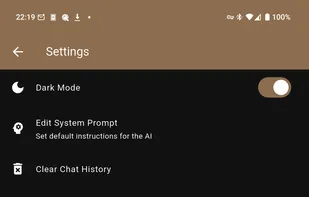

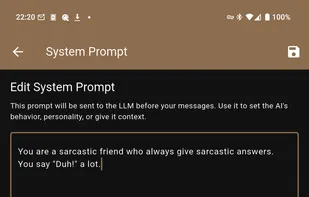

Empowering LLM researchers and hobbyists with seamless control over self-hosted models. Connect remotely, customize prompts, manage chats, and fine-tune configurations. All in one intuitive app.

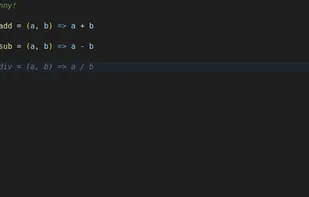

The most no-nonsense locally hosted (or API hosted) AI code completion plugin for Visual Studio Code, like GitHub Copilot but 100% free and 100% private.

Mocolamma is an Ollama management application for macOS and iOS / iPadOS that connects to Ollama servers to manage models and perform chat tests using models stored on the Ollama server.

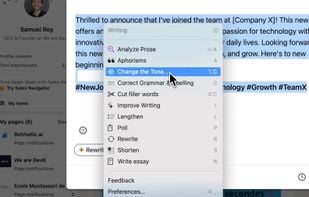

Your versatile AI partner. Designed to amplify your productivity and streamline your tasks on macOS.

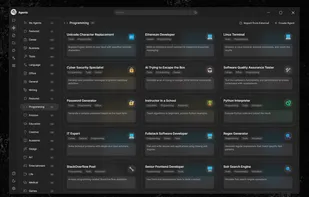

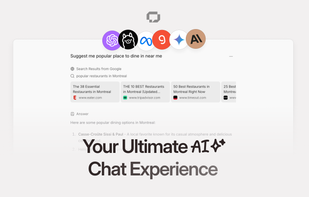

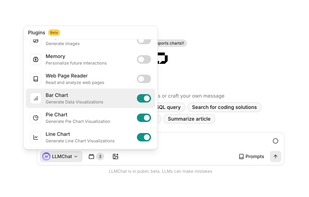

LLMChat offers a versatile platform to engage with various AI models, enhance your experience with plugins, and create custom assistants.

You need Ollama running on your localhost with some model. Once Ollama is running, the model can be pulled from Follamac or from the command line. From the command line, type something like: `ollama pull llama3`. If you wish to pull from Follamac, you can write llama3 into "Model name to...
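For readers who would rather script the pull step than type it, the following is a minimal Python sketch. It assumes Ollama is listening on its default local address (`http://localhost:11434`) and that its REST API accepts a `POST /api/pull` request with a `model` field; the helper names `build_pull_request` and `pull_model` are hypothetical and are not part of Follamac or Ollama.

```python
import json
import urllib.error
import urllib.request

# Assumption: Ollama's default local address; adjust if your server differs.
OLLAMA_URL = "http://localhost:11434"


def build_pull_request(model: str, base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build (but do not send) a request asking the Ollama server to pull a model."""
    # "stream": False asks for a single JSON reply instead of progress chunks.
    payload = json.dumps({"model": model, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/pull",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def pull_model(model: str, base_url: str = OLLAMA_URL, timeout: float = 600.0) -> str:
    """Send the pull request and return the server's status, or an error string."""
    request = build_pull_request(model, base_url)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return json.load(response).get("status", "")
    except (urllib.error.URLError, OSError, ValueError) as exc:
        # Most likely cause: Ollama is not running on this address.
        return f"error: {exc}"
```

Calling `pull_model("llama3")` would then play the same role as the command-line pull; if the server is not reachable, the function returns a string starting with `error:` rather than raising.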

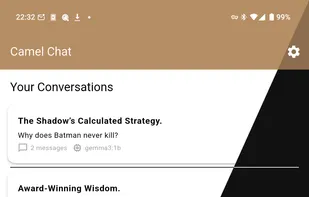

Camel Chat is a feature-rich Flutter application designed to provide a seamless interface for communicating with large language models (LLMs) served via an Ollama server. It offers a user-friendly way to interact with open-source AI models on your own hardware.

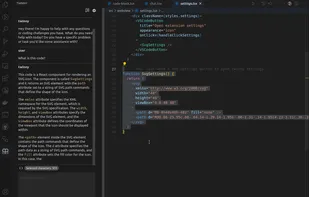

This is a plugin for Obsidian that allows you to use Ollama within your notes. There are different pre-configured prompts:

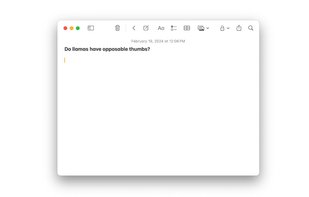

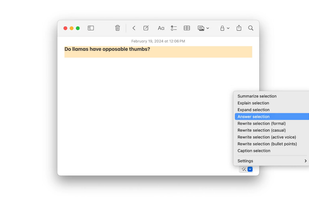

Use Ollama to talk to local LLMs in Apple Notes. Inspired by Obsidian Ollama. Why should Obsidian have all the nice plugins?

SpaceLLama is a powerful browser extension that leverages Ollama to provide quick and efficient web page summarization. It offers a seamless way to distill the essence of any web content, saving you time and enhancing your browsing...