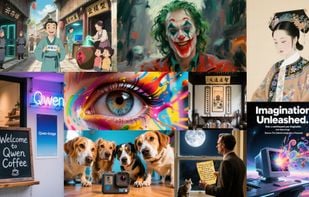

Qwen Image is a powerful image generation foundation model capable of complex text rendering and precise image editing.

- AI Image Generator

- Free • Open Source

- Online

- Self-Hosted

- Android

- Android Tablet

- iPhone

- iPad

- Mac

- Windows

Hugging Face is an online community dedicated to advancing AI and democratizing good machine learning. Hugging Face empowers the field of AI through various open-source developments, free and low-cost hosting of machine learning resources and by providing an accessible and...

Flux.2 is a state-of-the-art text-to-image synthesis model developed by Black Forest Labs. It generates high-quality images from text descriptions, pushing the boundaries of creativity, efficiency, and diversity in image generation.

An AI model that turns text prompts into images using DALL·E mini technology. Its developers acknowledge that it may be biased because it was trained on unfiltered data sources.

An open-source AI platform, launched in 2022, that creates images from text using latent diffusion models and supports inpainting, outpainting, and more.

AUTOMATIC1111's Stable Diffusion web UI provides a powerful web interface for Stable Diffusion, featuring a one-click installer, advanced inpainting, outpainting, and upscaling capabilities, built-in color sketching, and much more.

A streamlined interface for generating images with AI in Krita. Inpaint and outpaint with an optional text prompt; no tweaking required.
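Outpainting is usually driven by a mask: the canvas is enlarged, and a mask marks which pixels the model should generate and which must be kept. The sketch below is not the Krita plugin's code. It is a generic illustration that assumes images are plain 2D grids of grayscale values. The helper names `make_outpaint_canvas` and `composite` are hypothetical.

```python
from typing import List, Tuple

Grid = List[List[int]]

def make_outpaint_canvas(image: Grid, pad: int, fill: int = 0) -> Tuple[Grid, Grid]:
    """Pad `image` by `pad` pixels on every side (hypothetical helper).

    Returns (canvas, mask). The canvas holds the original pixels in the centre
    and `fill` elsewhere; the mask is 1 where new content should be generated
    (the padded border) and 0 where the original pixels must be kept.
    """
    h = len(image)
    w = len(image[0]) if h else 0
    new_h, new_w = h + 2 * pad, w + 2 * pad
    canvas = [[fill] * new_w for _ in range(new_h)]
    mask = [[1] * new_w for _ in range(new_h)]
    for y in range(h):
        for x in range(w):
            canvas[y + pad][x + pad] = image[y][x]
            mask[y + pad][x + pad] = 0
    return canvas, mask

def composite(original: Grid, generated: Grid, mask: Grid) -> Grid:
    """Keep original pixels where mask == 0; take generated pixels where mask == 1."""
    return [
        [g if m else o for o, g, m in zip(orow, grow, mrow)]
        for orow, grow, mrow in zip(original, generated, mask)
    ]
```

Inpainting uses the same `composite` step; only the mask changes, marking an interior region to repaint instead of a new border.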

Civitai describes itself as the only model-sharing hub for the AI art generation community. It is free to use, open source, and continually improving.

An open-source AI image generator that can create beautiful images in specific styles (https://alternativeto.net/software/flux-lora-ai-image-generator/about/).

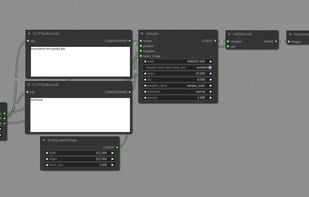

ComfyUI provides a powerful, modular workflow for AI art generation using Stable Diffusion. It lets you design and execute advanced Stable Diffusion pipelines through a graph/node/flowchart-based interface.
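A node-based pipeline can be thought of as a directed acyclic graph that is executed in dependency order, with each node receiving its inputs' outputs. The minimal sketch below illustrates that idea only. It is not ComfyUI's API: the node names (`checkpoint`, `prompt`, `sampler`, `decode`) and the string-returning lambdas are hypothetical stand-ins for real model operations. It uses `graphlib` from the standard library (Python 3.9+).

```python
from graphlib import TopologicalSorter
from typing import Any, Callable, Dict, List, Tuple

# Each node is (function, names of the nodes whose outputs it consumes).
Node = Tuple[Callable[..., Any], List[str]]

def run_graph(nodes: Dict[str, Node]) -> Dict[str, Any]:
    """Run every node after its inputs, passing their outputs as positional args."""
    deps = {name: set(inputs) for name, (_, inputs) in nodes.items()}
    results: Dict[str, Any] = {}
    # static_order() yields each node only after all of its predecessors.
    for name in TopologicalSorter(deps).static_order():
        fn, inputs = nodes[name]
        results[name] = fn(*(results[i] for i in inputs))
    return results

# Hypothetical text-to-image graph; strings stand in for models, latents, and images.
pipeline: Dict[str, Node] = {
    "checkpoint": (lambda: "model", []),
    "prompt": (lambda m: f"cond({m}, 'a red fox')", ["checkpoint"]),
    "sampler": (lambda m, c: f"latent({m}, {c})", ["checkpoint", "prompt"]),
    "decode": (lambda lat: f"image({lat})", ["sampler"]),
}

print(run_graph(pipeline)["decode"])
```

Modelling the pipeline as a graph means a wiring mistake that creates a loop is caught up front: `TopologicalSorter` raises `graphlib.CycleError` instead of running forever.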

InvokeAI is an implementation of Stable Diffusion, the open-source text-to-image and image-to-image generator. It offers a streamlined workflow with various new features and options to aid image generation.

Stable Diffusion AI (SDAI) is an easy-to-use app that lets you quickly generate images from text or other images with just a few clicks. It connects to your own server and can generate high-quality images in seconds.

Artbreeder aims to be a new type of creative tool that empowers users' creativity by making it easier to collaborate and explore. Originally called Ganbreeder, it started as an experiment in using breeding and collaboration as methods of exploring high-complexity spaces.

DiffusionBee is the easiest way to run Stable Diffusion locally on your M1 Mac. It comes with a one-click installer; no dependencies or technical knowledge are needed.

3D to Photo is an open-source package by Dabble that combines Three.js and Stable Diffusion to build a virtual photo studio for product photography.

Opendream brings much needed and familiar features, such as layering, non-destructive editing, portability, and easy-to-write extensions, to your Stable Diffusion workflows.

AI-imagined images. Pythonic generation of Stable Diffusion images.

A GUI for generating multimodal art (text-to-image) with multiple models, including Disco Diffusion v5, Hypertron v2, and VQGAN+CLIP.

Easy Diffusion (Stable Diffusion UI) is an easy-to-install distribution of Stable Diffusion, the leading open-source text-to-image AI software. Easy Diffusion installs all the software components required to run Stable Diffusion, plus its own user-friendly and powerful web...

Openjourney is a fine-tuned Stable Diffusion model trained on images created with Midjourney, and it aims to reproduce the style of Midjourney images. It was created by PromptHero. Its latest iteration is trained on 100K+ Midjourney v4 images.

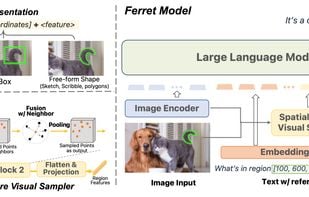

An end-to-end MLLM that accepts any-form referring and grounds anything in its response.

Text-to-image generation with VQGAN and CLIP (z+quantize method with augmentations).
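In VQGAN, each continuous latent vector is replaced by its nearest entry in a learned codebook before decoding. Assuming "z+quantize" refers to optimizing the continuous latent `z` and applying this quantization step before the decoder, the sketch below shows only the nearest-codebook lookup, in plain Python. The function names and the toy 2D codebook are hypothetical; a real VQGAN does this on tensors with a learned, much larger codebook.

```python
from typing import List, Sequence

Vector = Sequence[float]

def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def vector_quantize(latents: Sequence[Vector], codebook: Sequence[Vector]) -> List[int]:
    """Return, for each latent vector, the index of its nearest codebook entry."""
    return [
        min(range(len(codebook)), key=lambda k: squared_distance(z, codebook[k]))
        for z in latents
    ]

# Toy example: three 2D codebook entries and three latents to snap onto them.
codebook = [(0.0, 0.0), (1.0, 1.0), (-1.0, 2.0)]
latents = [(0.1, -0.2), (0.9, 1.2), (-0.8, 1.7)]
indices = vector_quantize(latents, codebook)
quantized = [codebook[k] for k in indices]  # what the decoder would receive
```

Because the nearest-neighbour lookup is not differentiable, implementations that optimize `z` through it typically pass gradients straight through the quantization step.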

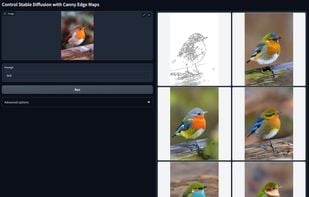

ControlNet is a neural network structure to control diffusion models by adding extra conditions.
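As described in the ControlNet paper, the original network block is kept frozen, a trainable copy of it receives the extra condition, and the two are joined through zero-initialized convolutions: y = F(x) + Z(F_copy(x + Z(c))). Because the zero layers output zeros at the start, training begins from the unmodified model. The toy sketch below assumes tiny fully connected layers on Python lists in place of real convolutions; every name in it is hypothetical.

```python
from typing import Callable, List

Vec = List[float]
Layer = Callable[[Vec], Vec]

def linear(weights: List[List[float]], bias: List[float]) -> Layer:
    """Tiny fully connected layer, standing in for a 1x1 convolution."""
    def apply(x: Vec) -> Vec:
        return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]
    return apply

def zero_linear(dim: int) -> Layer:
    """'Zero convolution': weight and bias start at 0, so the output starts at 0."""
    return linear([[0.0] * dim for _ in range(dim)], [0.0] * dim)

def controlled_block(locked: Layer, trainable_copy: Layer,
                     zero_in: Layer, zero_out: Layer) -> Callable[[Vec, Vec], Vec]:
    """y = locked(x) + zero_out(trainable_copy(x + zero_in(c)))."""
    def apply(x: Vec, c: Vec) -> Vec:
        # Inject the extra condition c into the trainable copy's input.
        conditioned = [xi + ci for xi, ci in zip(x, zero_in(c))]
        control = zero_out(trainable_copy(conditioned))
        # The frozen block's output is only offset by the control signal.
        return [a + b for a, b in zip(locked(x), control)]
    return apply

# Before training, both zero layers output zeros, so the condition has no effect yet.
locked = linear([[2.0, 0.0], [0.0, 3.0]], [0.0, 0.0])
copy = linear([[2.0, 0.0], [0.0, 3.0]], [0.0, 0.0])  # starts as a copy of the locked weights
block = controlled_block(locked, copy, zero_linear(2), zero_linear(2))
print(block([1.0, 1.0], [5.0, -5.0]))  # [2.0, 3.0], same as locked([1.0, 1.0])
```

Once training moves the zero layers away from zero, the condition `c` starts to steer the output while the locked weights stay untouched.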

Based on a short text description, ruDALL-E generates bright and colourful images on a variety of topics and subjects. The model understands a wide range of concepts and generates completely new images and objects that did not exist in the real world.