This Chrome extension is powered by Ollama. Inference runs entirely on your local machine, with no external server involved. However, because of security constraints in the Chrome extension platform, the extension relies on a locally running server to run the LLM.

Whismer AI is described as 'Whismer is a customized AI question-and-answer tool that allows users to train their own AI chatbot with their own resources, making it capable of solving any problem in their specific field' and is a website in the AI tools & services category. There are more than 50 alternatives to Whismer AI, including both websites and apps for a variety of platforms such as Android, iPhone, Mac and Windows. The best Whismer AI alternative is DeepSeek, which is both free and open source. Other great sites and apps similar to Whismer AI are Mistral Le Chat, Google Gemini, GPT4ALL and Jan.ai.

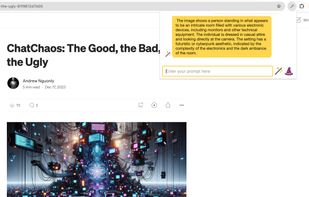

Summarize lengthy documents and videos effortlessly with Linfo.ai. Get structured insights, mind maps, and interactive Q&A.

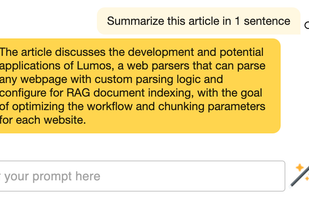

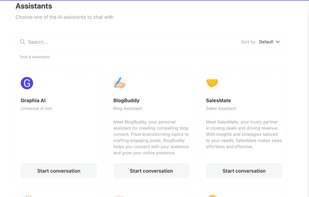

ConsoleX.ai is the ultimate AI playground with infinite possibilities. It offers a streamlined interface to chat with any model and comprehensive features designed to fuel your innovation. Whether you're looking for an alternative AI assistant to ChatGPT, Claude, or Gemini...

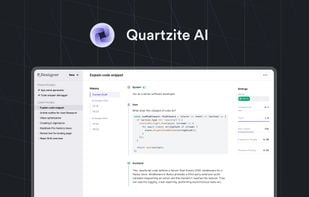

Quartzite is an AI productivity app and prompt IDE that lets you interact with large language models (LLMs) such as OpenAI’s GPT-4 or Google’s Gemini.

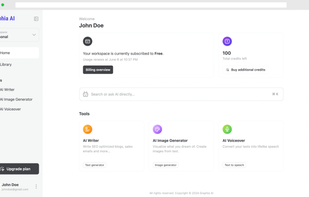

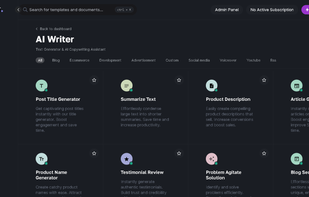

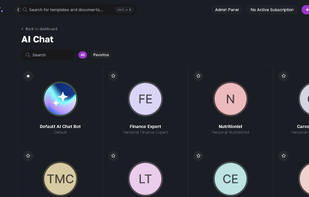

An all-in-one platform to generate AI content and start making money in minutes. Unlock your business potential by letting the AI work and generate money for you.

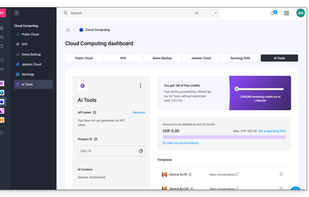

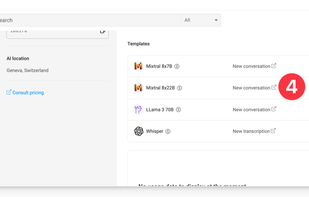

For home or business use, Infomaniak offers artificial intelligence services that are as powerful as, and more competitive than, ChatGPT, and that guarantee data protection thanks to end-to-end processing in Switzerland.