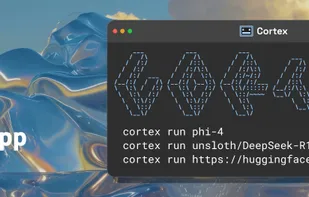

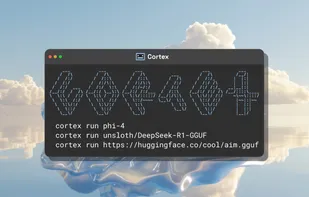

Osaurus is described as 'Native, Apple Silicon–only local LLM server. Similar to Ollama, but built on Apple's MLX for maximum performance on M-series chips. SwiftUI app + SwiftNIO server with OpenAI-compatible endpoints'. It is a large language model (LLM) tool in the AI Tools & Services category. There are more than 25 alternatives to Osaurus across a variety of platforms, including Windows, Mac and Linux, as well as apps distributed through Flathub and Flatpak. The best Osaurus alternative is Ollama, which is both free and open source. Other great apps like Osaurus are GPT4All, Jan.ai, Open WebUI and AnythingLLM.