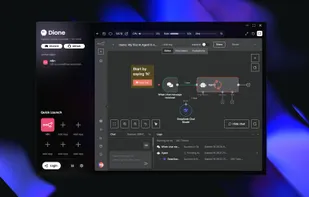

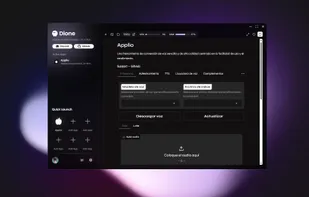

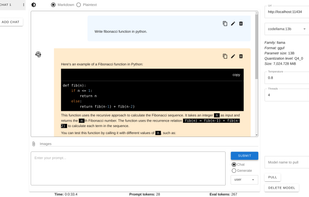

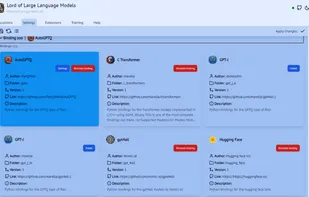

Dione makes installing complex applications as simple as clicking a button, with no terminal or technical knowledge needed. For developers, Dione offers a zero-friction way to distribute apps using just a JSON file. App installation has never been this effortless.

Cost / License

- Free

- Open Source (MIT)

Platforms

- Mac

- Windows

- Linux