LLM Hub is an open-source Android app for on-device LLM chat and image generation. It's optimized for mobile usage (CPU/GPU/NPU acceleration) and supports multiple model formats so you can run powerful models locally and privately.

ChatterUI is described as 'Run LLMs on device or connect to various commercial or open source APIs. ChatterUI aims to provide a mobile-friendly interface with fine-grained control over chat structuring' and is a large language model (LLM) tool in the AI Tools & Services category. There are more than 10 alternatives to ChatterUI for a variety of platforms, including Mac, iPad, iPhone, Android and Windows. The best ChatterUI alternative is Lumo by Proton, which is both free and open source. Other great apps like ChatterUI are AnythingLLM, PocketPal, Google AI Edge Gallery and Chatbox AI.

Run LLMs on AMD Ryzen™ AI NPUs in minutes. Like Ollama, but purpose-built and deeply optimized for AMD NPUs.

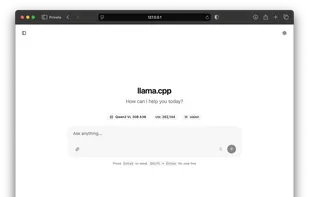

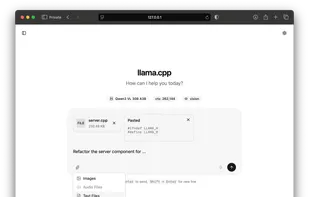

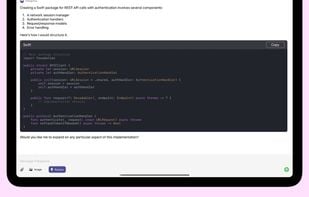

The main goal of llama.cpp is to enable LLM inference with minimal setup and state-of-the-art performance on a wide range of hardware - locally and in the cloud.

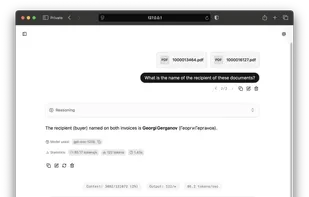

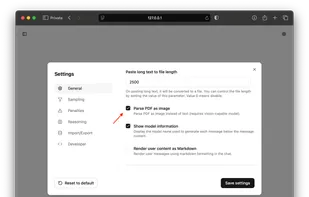

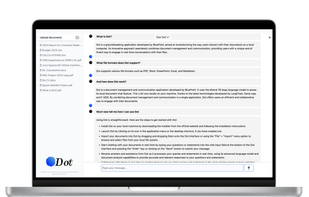

A standalone open source app meant for easy use of local LLMs and RAG in particular to interact with documents and files similarly to Nvidia's Chat with RTX.

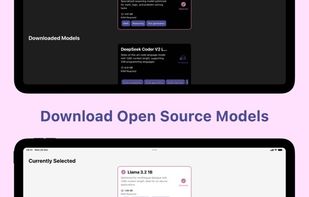

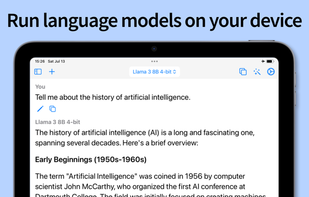

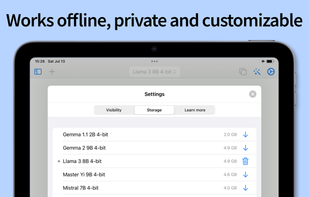

Local Chat allows you to run the latest open language models on your Mac, iPad, and iPhone. Once you've downloaded the models, you can use the chat function even when your device is offline. Your chats remain totally private, since no internet connection is required to...

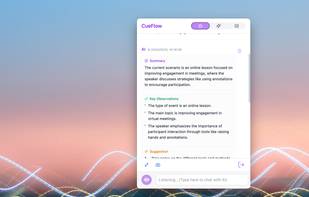

Empowering your conversations with real-time AI insights. CueFlow transcribes locally, analyzes dialogue, understands context, and provides timely assistance, helping you navigate various scenarios with confidence and without data privacy concerns.