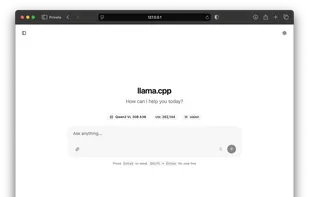

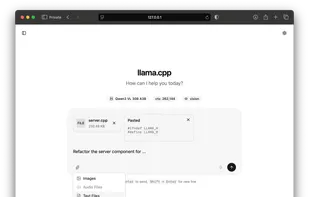

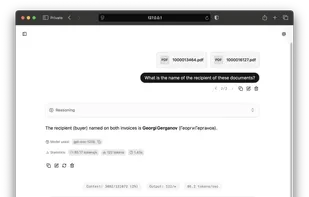

The main goal of llama.cpp is to enable LLM inference with minimal setup and state-of-the-art performance on a wide range of hardware, both locally and in the cloud.

Cost / License

- Free

- Open Source (MIT)

Platforms

- Windows

- Mac

- Linux

- Docker

- Homebrew

- Nix Package Manager

- MacPorts

- Self-Hosted