Run LLMs on AMD Ryzen™ AI NPUs in minutes. Like Ollama, but purpose-built and deeply optimized for AMD NPUs.

Cost / License

- Free Personal

- Open Source

Platforms

- Windows

- Online

- Self-Hosted

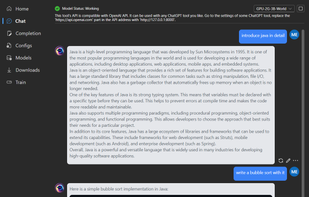

AI00 RWKV Server is described as 'Inference API server for the RWKV language model based upon the web-rwkv inference engine' and is a large language model (LLM) tool in the AI Tools & Services category. There are more than 10 alternatives to AI00 RWKV Server for a variety of platforms, including Windows, Linux, Mac, Self-Hosted and Python apps. The best AI00 RWKV Server alternative is Ollama, which is both free and Open Source. Other great apps like AI00 RWKV Server are GPT4ALL, Jan.ai, LM Studio and LocalAI.

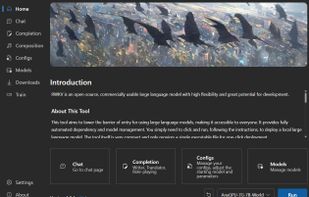

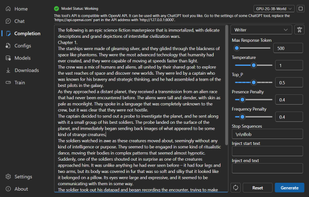

This project aims to remove the barriers to using large language models by automating everything for you. All you need is a lightweight executable of just a few megabytes. Additionally, this project provides an interface compatible with the OpenAI API, which means...
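An OpenAI-compatible interface generally means that clients written for the OpenAI Chat Completions format can talk to the local server instead. The following is a minimal sketch, using only the Python standard library, of what such a request might look like. It assumes the server accepts the standard `/v1/chat/completions` path; the base URL and the model name `example-model` are hypothetical placeholders, not values documented by the project, so check the server's own documentation for the real address and supported endpoints.

```python
import json
import urllib.request

# Hypothetical local address: the real host and port depend on how the
# server is configured and are not stated in this listing.
BASE_URL = "http://127.0.0.1:8000"


def build_chat_request(base_url, model, prompt):
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": model,  # hypothetical model name supplied by the caller
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request):
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    # OpenAI-style responses put the generated message under choices[0].
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request(BASE_URL, "example-model", "Hello!")
    print(req.full_url)
    # print(send(req))  # uncomment once a compatible server is running
```

Building the request separately from sending it keeps the example runnable without a server; only `send` needs a live endpoint.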

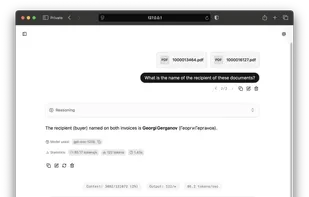

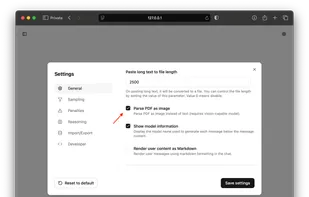

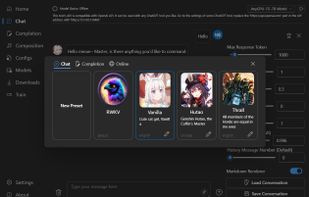

Multimodal AI Assistant:

- Interact with models through text, images, and audio

Privacy-Focused:

- Runs entirely on-device; no data is sent to external servers

- Keeps user information secure and conversations confidential

Extensive Model Support...

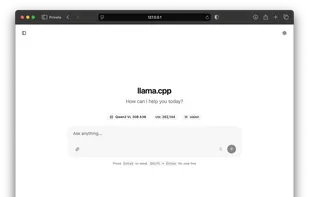

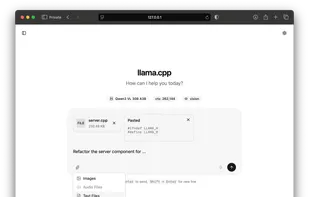

The main goal of llama.cpp is to enable LLM inference with minimal setup and state-of-the-art performance on a wide range of hardware - locally and in the cloud.