Run:ai

Transform your AI infrastructure with Run:ai to accelerate development, optimize resources, and lead the race in AI innovation.

Cost / License

- Subscription

- Proprietary

Platforms

- Online

- Software as a Service (SaaS)

Features

- Orchestration

- Kubernetes

- AI-Powered

- Container Orchestration

- Resource management

Run:ai News & Activities

Recent News

- POX published news article about Run:ai

NVIDIA acquires Kubernetes-based workload management software provider Run:ai for $700M

NVIDIA has announced it has officially entered a definitive agreement to acquire Run:ai, a software...

Recent activities

- kdi23 added Run:ai as alternative to VideoGen API

- taam-cloud added Run:ai as alternative to Taam Cloud

- POX added Run:ai as alternative to Hugging Face Generative AI Services and NetMind

- POX added Run:ai as alternative to NVIDIA NIM and AWS Neuron

Run:ai information

What is Run:ai?


Built for AI. Specialized for GPUs. Designed with an eye on the future, Run:ai’s platform ensures your AI initiatives can always take advantage of cutting-edge breakthroughs.

Run:ai Cluster Engine

We’ve built the Cluster Engine so you can speed up your AI initiatives and lead the race.

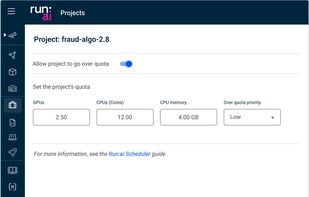

- AI Workload Scheduler: Optimize resource management with a Workload Scheduler tailored for the entire AI life cycle

- GPU Fractioning: Increase cost efficiency of Notebook Farms and Inference environments with Fractional GPUs

- Node Pooling: Control heterogeneous AI Clusters with quotas, priorities and policies at the Node Pool level

- Container Orchestration: Orchestrate distributed containerized workloads on Cloud-Native AI Clusters
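To make the GPU Fractioning idea concrete, here is a minimal sketch of how fractional requests could share whole GPUs. It is an illustration only, not Run:ai's scheduler or API: the function `pack_fractional_gpus`, the workload names, and the first-fit-decreasing strategy are all assumptions chosen for simplicity.

```python
from fractions import Fraction


def pack_fractional_gpus(requests, gpu_count):
    """Place fractional GPU requests using first-fit-decreasing.

    Hypothetical helper for illustration; not part of any Run:ai API.
    requests: dict mapping workload name -> Fraction of one GPU (0 < f <= 1).
    Returns (placement, pending), where placement maps a GPU index to the
    workloads placed on it and pending lists workloads that did not fit.
    """
    free = [Fraction(1)] * gpu_count            # remaining capacity per GPU
    placement = {i: [] for i in range(gpu_count)}
    pending = []

    # Consider the largest requests first; break ties by name for determinism.
    ordered = sorted(requests.items(), key=lambda kv: (-kv[1], kv[0]))
    for name, frac in ordered:
        for i in range(gpu_count):
            if free[i] >= frac:                 # first GPU with enough room
                free[i] -= frac
                placement[i].append(name)
                break
        else:
            pending.append(name)                # no GPU had enough free capacity
    return placement, pending


# Assumed example: two inference services at half a GPU each and
# four notebooks at a quarter GPU each, on a two-GPU node.
requests = {
    "inference-a": Fraction(1, 2),
    "inference-b": Fraction(1, 2),
    "nb-1": Fraction(1, 4),
    "nb-2": Fraction(1, 4),
    "nb-3": Fraction(1, 4),
    "nb-4": Fraction(1, 4),
}
placement, pending = pack_fractional_gpus(requests, gpu_count=2)
```

In this assumed scenario, six workloads fit on two GPUs, where whole-GPU allocation would serve only two of them. A production scheduler would also need to account for GPU memory isolation, priorities, and quotas, which this sketch ignores.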

Meet your new AI cluster: Utilized. Scalable. Under control.

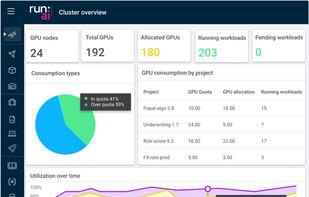

- 10x More Workloads on the Same Infrastructure: With Run:ai’s dynamic scheduling, GPU pooling, GPU fractioning, and more

- Fair-Share Scheduling, Quota Management, Priorities, and Policies: With Run:ai Workload Scheduler, Node Pools, policy enforcement, and more

- Insights Into Infrastructure and Workload Utilization Across Clouds and On-Premises: With overview dashboards, historical analytics, and consumption reports

Your new AI dev platform: Simple. Scalable. Open.

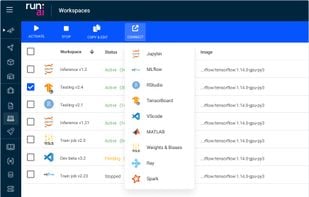

- Notebooks on Demand: Launch customized workspaces with your favorite tools and frameworks

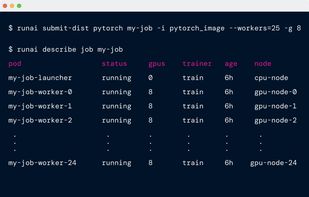

- Training & Fine-tuning: Queue batch jobs and run distributed training with a single command

- Private LLMs: Deploy and manage your inference models from one place