NVIDIA NIM

NVIDIA NIM is a set of accelerated inference microservices that allow organizations to run AI models on NVIDIA GPUs anywhere.

Cost / License

- Freemium

- Proprietary

Platforms

- Self-Hosted

- Docker

- Kubernetes

- Online




NVIDIA NIM information

What is NVIDIA NIM?

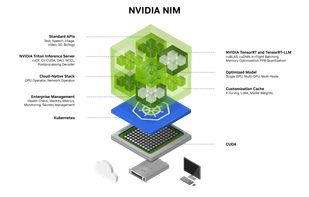

NVIDIA NIM provides containers for self-hosting GPU-accelerated inference microservices for pretrained and customized AI models across clouds, data centers, RTX AI PCs, and workstations. NIM microservices expose industry-standard APIs (for large language models, an OpenAI-compatible HTTP API), so they integrate directly into AI applications, development frameworks, and workflows. Built on pre-optimized inference engines from NVIDIA and the community, including NVIDIA TensorRT and TensorRT-LLM, NIM microservices are tuned for response latency and throughput on each combination of foundation model and GPU.
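As an illustration of that API integration, here is a minimal sketch of calling a self-hosted LLM microservice through an OpenAI-style chat completions endpoint, using only the Python standard library. It assumes a NIM LLM container is already running and reachable at `http://localhost:8000/v1`; that base URL and the model name `meta/llama3-8b-instruct` are placeholders for your own deployment, and the helper functions are hypothetical, not part of any NVIDIA SDK.

```python
import json
import urllib.request

# Assumptions: a NIM LLM container is listening locally on port 8000 and serves
# an OpenAI-style chat completions route. Adjust both values for your deployment.
BASE_URL = "http://localhost:8000/v1"
MODEL = "meta/llama3-8b-instruct"  # placeholder model name


def build_chat_request(base_url: str, model: str, prompt: str,
                       max_tokens: int = 128) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(body: dict) -> str:
    """Return the assistant text from an OpenAI-style chat completion response."""
    return body["choices"][0]["message"]["content"]


def ask(prompt: str) -> str:
    """Send one prompt to the running service and return the reply text."""
    request = build_chat_request(BASE_URL, MODEL, prompt)
    with urllib.request.urlopen(request, timeout=60) as response:
        return extract_reply(json.loads(response.read()))
```

With the service up, `print(ask("Summarize what an inference microservice is in one sentence."))` sends a single request. Request construction is kept separate from the network call so the payload can be inspected, tested, or reused with a different HTTP client.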

How it works

NVIDIA NIM shortens the path from experimentation to deployed AI applications by giving enthusiasts, developers, and AI builders pre-optimized models and industry-standard APIs for building AI agents, copilots, chatbots, and assistants. Because it is built on inference engines such as TensorRT, TensorRT-LLM, and PyTorch, NIM can serve the latest AI foundation models on NVIDIA GPUs anywhere from the cloud or data center to the PC.
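Chatbots and assistants built on a chat completions API usually resend the whole conversation on every turn, because each request is stateless. The sketch below, using a hypothetical `ChatSession` helper, shows that bookkeeping. The `send` function is injected (in practice, for example, a wrapper that POSTs the messages to the service's `/v1/chat/completions` endpoint), so the logic can be tried without a GPU. This is a general pattern for OpenAI-style chat APIs, not NIM-specific code.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Message = Dict[str, str]
# A send function receives the full message list and returns the assistant's reply text.
SendFn = Callable[[List[Message]], str]


@dataclass
class ChatSession:
    """Minimal multi-turn chat state over an OpenAI-style API (hypothetical helper)."""
    send: SendFn
    system_prompt: str = "You are a helpful assistant."
    history: List[Message] = field(default_factory=list)

    def __post_init__(self) -> None:
        # The system message stays at the front of every request.
        self.history.append({"role": "system", "content": self.system_prompt})

    def ask(self, user_text: str) -> str:
        """Record the user turn, send the whole transcript, and record the reply."""
        self.history.append({"role": "user", "content": user_text})
        reply = self.send(list(self.history))  # pass a copy of the transcript
        self.history.append({"role": "assistant", "content": reply})
        return reply
```

Injecting `send` keeps the conversation logic independent of the transport, so the same class works against a local container, a hosted endpoint, or a test stub.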