NexaSDK

Run frontier LLMs and VLMs with day-0 model support across GPU, NPU, and CPU. Runtime coverage spans PC (Python/C++), mobile (Android & iOS), and Linux/IoT (Arm64 & x86 Docker). Supported models include OpenAI GPT-OSS, IBM Granite-4, Qwen-3-VL, Gemma-3n, Ministral-3, and more.

Cost / License

- Free

- Open Source (Apache-2.0)

Platforms

- Windows

- Android

- iPhone

- Mac

- Linux


NexaSDK News & Activities

Recent activities

- bugmenot added NexaSDK as alternative to AI Playground

- bugmenot added NexaSDK as alternative to FastFlowLM

- bugmenot added NexaSDK as alternative to AI00 RWKV Server

- bugmenot added NexaSDK as alternative to AI Dev Gallery and LocalAI

- bugmenot added NexaSDK as alternative to Lemonade Server, llama.cpp and vllm-playground

- bugmenot added NexaSDK as alternative to RWKV Runner and RWKV Chat

- bugmenot added NexaSDK as alternative to Nexa Studio

NexaSDK information

What is NexaSDK?

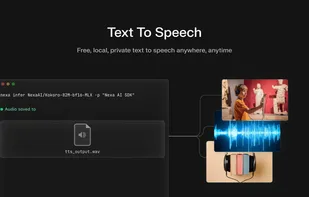

NexaSDK is an easy-to-use developer toolkit for running any AI model locally across NPUs, GPUs, and CPUs. It is powered by our NexaML engine, built entirely from scratch for peak performance on every hardware stack. Unlike wrappers that depend on existing runtimes, NexaML is a unified inference engine built at the kernel level, which is what lets NexaSDK achieve Day-0 support for new model architectures (LLM, VLM, CV, Embedding, Rerank, ASR, TTS). NexaML supports three model formats: GGUF, MLX, and Nexa AI's own .nexa format.