Google EditBench

EditBench is a systematic benchmark for text-guided image inpainting. EditBench evaluates inpainting edits on natural and generated images exploring objects, attributes, and scenes. Through extensive human evaluation on EditBench, we find that object masking during training...

Cost / License

- Free

- Proprietary

Platforms

- Windows

Features

- AI-Powered

Tags

- inpainting

- ai-generated-images

Google EditBench News & Activities

Recent News

- Maoholguin published a news article about Google EditBench

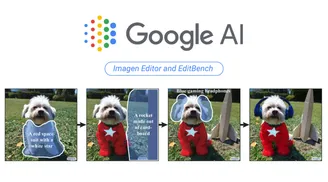

Google unveils Imagen Editor & EditBench: AI-Powered text-based image editing tools with localized capabilities

Google has recently unveiled Imagen Editor, an innovative research diffusion-based model text-based...

Google EditBench information

What is Google EditBench?

EditBench is a systematic benchmark for text-guided image inpainting. It evaluates inpainting edits on natural and generated images, exploring objects, attributes, and scenes. Through extensive human evaluation on EditBench, its authors find that object masking during training leads to across-the-board improvements in text-image alignment. As a cohort, the evaluated models are better at object rendering than text rendering, and they handle material, color, and size attributes better than count and shape attributes.

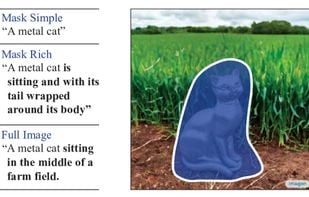

The EditBench dataset for text-guided image inpainting evaluation contains 240 images: 120 generated and 120 natural. Generated images are synthesized by Parti, and natural images are drawn from the Visual Genome and Open Images datasets. EditBench captures a wide variety of language, image types, and levels of text prompt specificity (i.e., simple, rich, and full captions). Each example consists of (1) a masked input image, (2) an input text prompt, and (3) a high-quality output image used as reference for automatic metrics.

To provide insight into the relative strengths and weaknesses of different models, EditBench prompts are designed to test fine-grained details along three categories: (1) attributes (e.g., material, color, shape, size, count); (2) object types (e.g., common, rare, text rendering); and (3) scenes (e.g., indoor, outdoor, realistic, or paintings).

To understand how different prompt specifications affect model performance, EditBench provides three text prompt types: a single-attribute description of the masked object (Mask Simple), a multi-attribute description of the masked object (Mask Rich), and a description of the entire image (Full Image). Mask Rich, in particular, probes the models’ ability to handle complex attribute binding and inclusion.
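The example structure described above (a masked input image, a text prompt at one of three levels of specificity, and a reference output image) could be modeled as a small record type. The following Python sketch is illustrative only: the class, field, and function names (`EditBenchExample`, `image_path`, `prompt_for`, and so on) are assumptions made for this example, not the schema or API of the released dataset.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import Iterable, Mapping


class PromptType(Enum):
    """The three prompt types named in the description."""
    MASK_SIMPLE = "mask_simple"  # single-attribute description of the masked object
    MASK_RICH = "mask_rich"      # multi-attribute description of the masked object
    FULL_IMAGE = "full_image"    # description of the entire image


@dataclass(frozen=True)
class EditBenchExample:
    # Hypothetical layout; field names are illustrative, not the official schema.
    image_path: str      # input image to be edited
    mask_path: str       # mask marking the region to inpaint
    reference_path: str  # reference output image used for automatic metrics
    source: str          # "generated" or "natural"
    prompts: Mapping[PromptType, str]


def prompt_for(example: EditBenchExample, prompt_type: PromptType) -> str:
    """Return the prompt text of the requested type for one example."""
    return example.prompts[prompt_type]


def count_by_source(examples: Iterable[EditBenchExample]) -> Counter:
    """Tally examples by image source (generated vs. natural)."""
    return Counter(example.source for example in examples)
```

With a record like this, an evaluation loop could select one prompt type per run and compare model outputs across Mask Simple, Mask Rich, and Full Image prompts for the same masked image.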