Fireworks AI

Harness state-of-the-art open-source LLMs and image models at blazing speeds with Fireworks AI. The platform offers rapid deployment, fine-tuning at no extra cost, FireAttention for efficient model serving, and FireFunction for building complex AI applications such as automation tools and domain-expert copilots.

Cost / License

- Paid

- Proprietary

Platforms

- Online


Fireworks AI News & Activities

Recent News

- POX published a news article about Fireworks AI

Fireworks AI launches f1, a new compound AI model specialized in complex reasoning tasks

Fireworks AI, a platform to build, deploy, and fine-tune generative AI models, has unveiled Firewor...

Recent activities

- HappyGamerGoose added Fireworks AI as an alternative to Minimax Platform

- HappyGamerGoose added Fireworks AI as an alternative to EZmodel

- HappyGamerGoose added Fireworks AI as an alternative to AIHubMix

- HappyGamerGoose added Fireworks AI as an alternative to OpenAI Platform

- HappyGamerGoose added Fireworks AI as an alternative to DeepSeek Platform

- HappyGamerGoose added Fireworks AI as an alternative to Awan LLM

- HappyGamerGoose added Fireworks AI as an alternative to Groq

- HappyGamerGoose added Fireworks AI as an alternative to Cerebras

- HappyGamerGoose updated Fireworks AI

- POX added Fireworks AI as an alternative to Cactus

Fireworks AI information

What is Fireworks AI?

Use state-of-the-art open-source LLMs and image models at blazing-fast speeds, or fine-tune and deploy your own at no additional cost with Fireworks AI.

- The fastest and most efficient inference engine for building production-ready compound AI systems.

- Bridge the gap between prototype and production to unlock real value from generative AI.

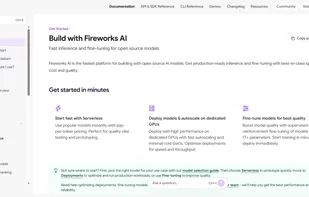

Fastest platform to build and deploy generative AI

Start with the fastest model APIs, boost performance with cost-efficient customization, and evolve to compound AI systems to build powerful applications.

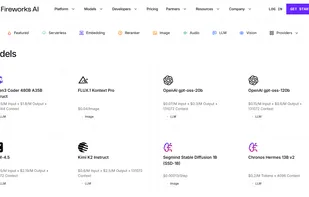

Instantly run popular and specialized models, including Llama 3, Mixtral, and Stable Diffusion, optimized for low latency, high throughput, and long context lengths. FireAttention, our custom CUDA kernel, serves models four times faster than vLLM without compromising quality.

- Disaggregated serving

- Semantic caching

- Speculative decoding
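Speculative decoding is a general inference technique rather than something specific to Fireworks: a small draft model proposes several tokens, and the large target model verifies them, keeping the longest prefix it agrees with. The sketch below is a minimal greedy variant in pure Python, assuming deterministic toy models; `toy_target`, `toy_draft`, `speculative_decode`, and the other names are hypothetical, and nothing here reflects how Fireworks implements the feature. Production systems usually sample tokens and use a probabilistic accept/reject rule instead of exact matching.

```python
from typing import Callable, List, Tuple

# A "model" here is any function mapping a token prefix to its greedy next token.
Model = Callable[[List[int]], int]


def toy_target(prefix: List[int]) -> int:
    # Hypothetical stand-in for the large model: a deterministic function of the prefix.
    return (sum(prefix) * 7 + len(prefix)) % 10


def toy_draft(prefix: List[int]) -> int:
    # Hypothetical stand-in for the small draft model: agrees with the target
    # except when the prefix length is 3 mod 4, where it guesses wrong.
    guess = toy_target(prefix)
    return (guess + 1) % 10 if len(prefix) % 4 == 3 else guess


def greedy_decode(model: Model, prompt: List[int], n_new: int) -> List[int]:
    # Baseline: one sequential target-model step per generated token.
    out = list(prompt)
    for _ in range(n_new):
        out.append(model(out))
    return out


def speculative_decode(target: Model, draft: Model, prompt: List[int],
                       n_new: int, k: int = 4) -> Tuple[List[int], int]:
    out = list(prompt)
    goal = len(prompt) + n_new
    rounds = 0  # verification rounds (one batched target pass each in a real engine)
    while len(out) < goal:
        # 1. The draft model proposes up to k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(min(k, goal - len(out))):
            proposal.append(draft(out + proposal))
        # 2. The target checks the proposals left to right. This toy calls the
        #    target once per position; a real engine scores them in one forward pass.
        accepted: List[int] = []
        for tok in proposal:
            expected = target(out + accepted)
            accepted.append(expected)  # equals tok on a match, replaces it on a mismatch
            if expected != tok:
                break  # discard the remaining proposals
        else:
            # Every proposal matched, so the same pass yields one extra target token.
            if len(out) + len(accepted) < goal:
                accepted.append(target(out + accepted))
        out.extend(accepted)
        rounds += 1
    return out, rounds


if __name__ == "__main__":
    prompt = [1, 2, 3]
    tokens, rounds = speculative_decode(toy_target, toy_draft, prompt, n_new=12)
    print(tokens == greedy_decode(toy_target, prompt, 12), rounds)
```

Because every emitted token is one the target model itself would have chosen, the result matches plain greedy decoding; the speedup in a real system comes from needing fewer sequential target passes whenever the draft guesses correctly.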

Fine-tune with our LoRA-based service, twice as cost-efficient as other providers. Instantly deploy and switch between up to 100 fine-tuned models to experiment without extra costs. Serve models at blazing-fast speeds of up to 300 tokens per second on our serverless inference platform.

- Supervised fine-tuning

- Self-tune

- Cross-model batching
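LoRA (low-rank adaptation) keeps a model's base weight matrix W frozen and trains a small update scaled as (alpha / r) · B · A, where A is r × d_in, B is d_out × r, and the rank r is small. Because each fine-tune is only a pair of small matrices, one copy of the base weights can serve requests for many adapters in the same batch; this is one plausible reading of the "switch between up to 100 fine-tuned models" and "cross-model batching" claims above, not a description of Fireworks' internals. The pure-Python sketch below illustrates the general idea on a single linear layer; `LoRAAdapter`, `batched_forward`, and the adapter names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    # Plain matrix-vector product: one dot product per row of m.
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LoRAAdapter:
    """Hypothetical container for the low-rank factors of one fine-tune."""

    def __init__(self, a: Matrix, b: Matrix, alpha: float) -> None:
        self.a = a                    # r x d_in down-projection
        self.b = b                    # d_out x r up-projection
        self.scale = alpha / len(a)   # standard LoRA scaling alpha / r

    def delta(self, x: Vector) -> Vector:
        # Computes (alpha / r) * B @ (A @ x) without materializing B @ A.
        return [self.scale * y for y in matvec(self.b, matvec(self.a, x))]


def batched_forward(base: Matrix,
                    adapters: Dict[str, LoRAAdapter],
                    batch: List[Tuple[Optional[str], Vector]]) -> List[Vector]:
    # Each request names the adapter it wants (None means the plain base model).
    # The base weights are shared by every request; a real server would run
    # that part as one batched matrix multiply on the GPU.
    outputs = []
    for adapter_id, x in batch:
        y = matvec(base, x)
        if adapter_id is not None:
            y = [yi + di for yi, di in zip(y, adapters[adapter_id].delta(x))]
        outputs.append(y)
    return outputs


if __name__ == "__main__":
    base = [[1.0, 0.0], [0.0, 1.0]]  # 2 x 2 identity stands in for frozen weights
    adapters = {
        "support-bot": LoRAAdapter(a=[[1.0, 0.0]], b=[[0.0], [1.0]], alpha=1.0),
        "sql-helper": LoRAAdapter(a=[[0.0, 1.0]], b=[[1.0], [0.0]], alpha=2.0),
    }
    x = [2.0, 3.0]
    print(batched_forward(base, adapters, [("support-bot", x), ("sql-helper", x), (None, x)]))
```

Merging an adapter into the base weights (W + (alpha / r) · B · A) gives identical outputs for that one fine-tune, but keeping the factors separate is what lets a single base model serve many fine-tunes at once.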

Handle tasks using multiple models, modalities, external APIs, and data sources instead of relying on a single model. Use FireFunction, a state-of-the-art function-calling model, to compose compound AI systems for RAG, search, and domain-expert copilots for automation, code, math, medicine, and more.

- Open-weight model

- Orchestration and execution

- Schema-based constrained generation
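Function calling, as described for FireFunction above, generally means the model emits a structured call (a tool name plus arguments) that the application validates and executes. The sketch below shows that application-side loop in plain Python, assuming the model output is a JSON object of the form `{"name": ..., "arguments": {...}}`; the registry, `get_weather`, `validate`, and `dispatch` are hypothetical, and real APIs differ in format (OpenAI-style APIs, for example, return `arguments` as a JSON-encoded string). It also swaps in a simpler technique: it checks a finished output against a small subset of JSON Schema, whereas schema-based constrained generation enforces the schema during decoding so invalid output is never produced.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical tool registry: name -> parameter schema and Python implementation.
TOOLS: Dict[str, Dict[str, Any]] = {}

# Subset of JSON Schema type names mapped to Python types.
_TYPES: Dict[str, Any] = {
    "string": str, "integer": int, "number": (int, float),
    "boolean": bool, "object": dict, "array": list,
}


def tool(name: str, parameters: Dict[str, Any]) -> Callable:
    # Decorator that registers a function together with its parameter schema.
    def register(fn: Callable) -> Callable:
        TOOLS[name] = {"parameters": parameters, "fn": fn}
        return fn
    return register


@tool("get_weather", {
    "type": "object",
    "properties": {"city": {"type": "string"}, "days": {"type": "integer"}},
    "required": ["city"],
})
def get_weather(city: str, days: int = 1) -> str:
    # Stub implementation; a real tool would call an external weather API.
    return f"{city}: {days}-day forecast (stub)"


def validate(args: Dict[str, Any], schema: Dict[str, Any]) -> None:
    # Checks required keys, rejects unknown keys, and checks primitive types.
    for key in schema.get("required", []):
        if key not in args:
            raise ValueError(f"missing required argument: {key}")
    for key, value in args.items():
        spec = schema["properties"].get(key)
        if spec is None:
            raise ValueError(f"unexpected argument: {key}")
        kind = spec["type"]
        # bool is a subclass of int in Python, so reject it for non-boolean types.
        wrong_bool = isinstance(value, bool) and kind != "boolean"
        if wrong_bool or not isinstance(value, _TYPES[kind]):
            raise ValueError(f"argument {key!r} should be of type {kind}")


def dispatch(model_output: str) -> Any:
    # Parses the model's tool call, validates it, then runs the matching function.
    call = json.loads(model_output)
    entry = TOOLS.get(call.get("name"))
    if entry is None:
        raise ValueError(f"unknown tool: {call.get('name')}")
    args = call.get("arguments", {})
    validate(args, entry["parameters"])
    return entry["fn"](**args)


if __name__ == "__main__":
    print(dispatch('{"name": "get_weather", "arguments": {"city": "Paris", "days": 3}}'))
```

In a compound system, the tool's return value would typically be passed back to the model as context for its next response.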