Corpus2GPT

Corpus2GPT: A project enabling users to train their own GPT models on diverse datasets, including local languages and various corpus types, using Keras and compatible with TensorFlow, PyTorch, or JAX backends for subsequent storage or sharing.

Features

Properties

- Lightweight

Features

- No registration required

- Works Offline

Tags

- python3

- pytorch

- LLM Training

- llm-inference

- tensorflow

- jax

- attention-mechanism

- keras

Corpus2GPT information

What is Corpus2GPT?

Corpus2GPT is a platform for language model research that prioritizes accessibility and ease of use. Where many existing tools have complex and cumbersome codebases, Corpus2GPT is built around a modular design intended to be easy to navigate, modify, and understand. With documentation and support for various language corpora, backends, and scaling solutions, it targets a wide range of users, from seasoned researchers to industry professionals and enthusiasts.

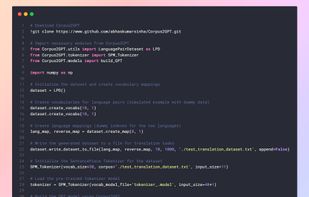

Corpus2GPT is a project for training your own GPT models on diverse datasets, including those in local languages and various corpus types. It uses Keras and supports the TensorFlow, PyTorch, and JAX backends, a combination the project presents as one of the first in the field, which makes it easier to benchmark the same model across backends. Beyond its current capabilities, Corpus2GPT aims to grow into a broader hub of language model tools, with features such as RAG (Retrieval-Augmented Generation) and MoE (Mixture of Experts) planned for the future. The stated goal is a suite for both beginners and seasoned practitioners that keeps pace with LLM (Large Language Model) advances and offers accessible presets and modules for building language models.
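Because the project runs on Keras, the backend is presumably selected the way Keras 3 selects it: through the `KERAS_BACKEND` environment variable, which Keras reads when it is first imported. The following is a minimal sketch under that assumption; `select_backend` is a hypothetical helper written for illustration and is not part of Corpus2GPT.

```python
import os

# The three backends named in the text, spelled the way Keras 3 expects
# them in KERAS_BACKEND ("torch" is the name used for PyTorch).
SUPPORTED_BACKENDS = ("tensorflow", "torch", "jax")


def select_backend(name: str) -> str:
    """Set KERAS_BACKEND. Call this before the first `import keras`."""
    backend = name.strip().lower()
    if backend == "pytorch":  # accept the common alias
        backend = "torch"
    if backend not in SUPPORTED_BACKENDS:
        raise ValueError(f"unsupported backend: {name!r}")
    os.environ["KERAS_BACKEND"] = backend
    return backend


select_backend("jax")
# import keras  # would now load with the JAX backend, if keras and jax are installed
```

Switching the argument between `"tensorflow"`, `"torch"`, and `"jax"` in separate runs is one way to benchmark the same training script across backends. The variable has no effect once Keras is already imported in the process.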

Current Features:

- Classical Multihead Attention: Corpus2GPT currently supports the classical multihead attention mechanism, a key component of transformer architectures that captures dependencies across different positions in the input sequence.

- Decoder: The tool includes a decoder module, essential for generating output sequences in autoregressive language models like GPT.

- Random Sampling Search Strategies: Corpus2GPT implements random sampling search strategies, which let users generate diverse outputs during model inference.

- Multiple Language Support: With built-in support for multiple languages, Corpus2GPT can train language models on diverse linguistic datasets.

- SentencePiece Tokenizer (and Vectorizer): Corpus2GPT uses a SentencePiece tokenizer and vectorizer to tokenize and vectorize input data, a preprocessing step that works across languages and domains.

- GPT Builder: Corpus2GPT provides a streamlined interface for building GPT models, simplifying the configuration and training of custom language models.

- Distributed Training Utilities: Tools for running distributed training with ease on the JAX and TensorFlow backends, with support for CPU, GPU, and TPU.
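To make the random sampling and decoder features more concrete, here is a rough sketch of how sampling-based generation typically works in an autoregressive model. It is plain Python and does not use Corpus2GPT's API. The names `sample_next_token`, `generate`, and `toy_model` are hypothetical, and temperature and top-k are assumed as common sampling variants; the source does not say which strategies the project implements.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, top_k=None, rng=random):
    """Draw one token id at random from unnormalized scores (logits)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    if top_k is not None and top_k < 1:
        raise ValueError("top_k must be at least 1")
    # Rank token ids by score and keep only the top_k best, if requested.
    ranked = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
    if top_k is not None:
        ranked = ranked[:top_k]
    # Temperature-scaled softmax weights, shifted by the max for stability.
    scaled = [logits[i] / temperature for i in ranked]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]
    # choices() normalizes the weights itself.
    return rng.choices(ranked, weights=weights, k=1)[0]


def generate(next_logits, prompt_ids, max_new_tokens, **sampling):
    """Autoregressive loop: sample a token, append it, feed the sequence back."""
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        ids.append(sample_next_token(next_logits(ids), **sampling))
    return ids


def toy_model(ids):
    # Stand-in for a trained decoder over a 5-token vocabulary:
    # it favors the token that follows the last one.
    return [3.0 if t == (ids[-1] + 1) % 5 else 0.0 for t in range(5)]


print(generate(toy_model, [0], max_new_tokens=8,
               temperature=0.8, top_k=3, rng=random.Random(0)))
```

Lower temperatures concentrate probability on the highest-scoring tokens, and `top_k=1` reduces the procedure to greedy decoding. Higher temperatures and larger `top_k` values give more varied output.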