VESSL

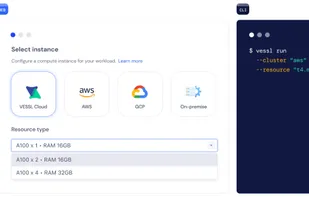

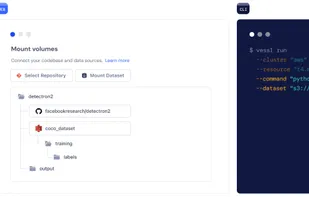

VESSL is an end-to-end ML/MLOps platform that enables machine learning engineers (MLEs) to customize and execute scalable training, optimization, and inference tasks in seconds. These individual tasks can then be pipelined using our workflow manager for CI/CD.

Cost / License

- Free Personal

- Proprietary

Platforms

- Online

- Software as a Service (SaaS)

VESSL information

What is VESSL?

VESSL is an end-to-end ML/MLOps platform that enables machine learning engineers (MLEs) to customize and execute scalable training, optimization, and inference tasks in seconds. These individual tasks can then be pipelined using our workflow manager for CI/CD. We abstract the complex compute backends required to manage ML infrastructure and pipelines into an easy-to-use web interface and CLI, and thereby shorten the turnaround from training to deployment.

Building, training, and deploying production machine learning models rely on complex compute backends and system details. This forces data scientists and ML researchers to spend most of their time battling engineering challenges and obscure infrastructure instead of leveraging their core competencies – developing state-of-the-art model architectures.

Existing solutions like Kubeflow and Ray are still too low-level and require months of complex setup by a dedicated system engineering team. Top ML teams at Uber, DeepMind, and Netflix have dedicated teams of MLOps engineers and internal ML platforms. However, most ML practitioners, even those at large software companies like Yahoo, still rely on scrappy scripts and unmaintained YAML files and waste hours just setting up a dev environment.

VESSL helps companies of any size and in any industry adopt scalable ML/MLOps practices instantly. By eliminating the overhead in ML systems with VESSL, companies like Hyundai Motors, Samsung, and Cognex are productionizing end-to-end machine learning pipelines within a few hours.