OpenETL

Cost / License

- Freemium (Subscription)

- Open Source (Apache-2.0)

Platforms

- Software as a Service (SaaS)

- Self-Hosted

- Docker

Features

- Data visualization

Tags

- etl

- big-data

What is OpenETL?

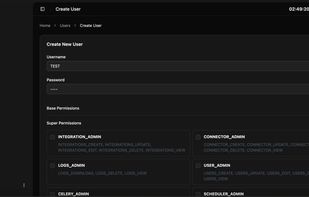

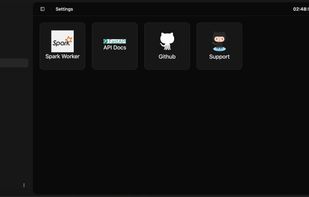

OpenETL is a robust and scalable ETL (Extract, Transform, Load) application built with modern technologies such as FastAPI, Next.js, and Apache Spark. Its intuitive interface simplifies the ETL process, letting users extract data from various sources, apply transformations, and load the results into their chosen target destinations.

Key Components of OpenETL:

- Backend: Built with Python 3.12 and FastAPI, which handle API requests and backend logic.

- Frontend: Built with Next.js, providing a smooth and interactive user experience.

- Compute Engine: Apache Spark is integrated for distributed data processing, enabling scalable and high-performance operations.

- Task Execution: Uses Celery to process tasks in the background, so long-running operations run reliably without blocking the application.

- Scheduling: APScheduler manages and schedules ETL jobs, enabling automated workflows.
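The division of labour above, where a scheduler decides when a job is due and a background worker actually runs it, can be sketched with the Python standard library alone. This is a hypothetical illustration, not OpenETL's implementation and not the APScheduler or Celery API: `IntervalJob`, `enqueue_due_jobs`, and `run_pending` are made-up names, and a `queue.Queue` stands in for the Celery broker.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from queue import Queue
from typing import Callable


@dataclass
class IntervalJob:
    """Hypothetical ETL job definition that should run every `interval`."""
    name: str
    interval: timedelta
    next_run: datetime


def enqueue_due_jobs(jobs: list[IntervalJob], now: datetime, task_queue: Queue) -> list[str]:
    """Scheduler side: queue every job that is due at `now`, then reschedule it."""
    dispatched = []
    for job in jobs:
        if job.next_run <= now:
            task_queue.put(job.name)
            dispatched.append(job.name)
            # Advance in whole intervals until past `now`: runs stay aligned to the
            # original start time, and any missed intervals collapse into this one run.
            while job.next_run <= now:
                job.next_run += job.interval
    return dispatched


def run_pending(task_queue: Queue, pipelines: dict[str, Callable[[], object]]) -> list[object]:
    """Worker side (stand-in for a Celery worker): pop queued job names and run them in order."""
    results = []
    while not task_queue.empty():
        name = task_queue.get()
        results.append(pipelines[name]())
        task_queue.task_done()
    return results
```

Rescheduling from the previous `next_run` rather than from `now` keeps runs on a fixed grid, and collapsing missed intervals avoids a burst of catch-up runs after downtime. APScheduler's `coalesce` job option addresses the same situation; how OpenETL configures its scheduler is not stated here.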

Features

- ETL with Full Load: Extract data from different sources and load it in full into your preferred target location, reloading the complete dataset on each run.

- Scheduled Timing: Schedule your ETL tasks to run at specific intervals, keeping your data up to date.

- User Interface: A clean and user-friendly UI to monitor and control your ETL processes with ease.

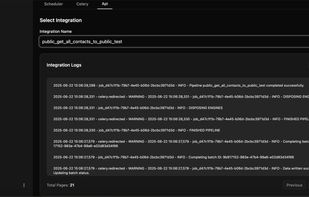

- Logging: Comprehensive logging to track every action, error, and data transformation throughout the ETL pipeline.

- Integration History: Keep track of all your integration jobs with detailed records of past runs, including statuses and errors.

- Batch Processing: Handle large volumes of data by processing it in batches for better efficiency.

- Distributed Spark Computing: Use Spark to spread the processing of large datasets across multiple nodes.
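To make the full-load, batch-processing, logging, and integration-history features above more concrete, here is a minimal Python sketch. It is not OpenETL's code: it uses the standard-library `sqlite3` module as a stand-in for real sources and targets and plain Python loops in place of Spark, and the names `extract_in_batches` and `run_full_load`, as well as the fields of the history record, are hypothetical.

```python
import logging
import sqlite3
from datetime import datetime, timezone
from typing import Callable, Iterator

logger = logging.getLogger("etl_sketch")


def extract_in_batches(conn: sqlite3.Connection, query: str, batch_size: int) -> Iterator[list]:
    """Yield the rows returned by `query` in lists of at most `batch_size` rows."""
    cursor = conn.execute(query)
    while batch := cursor.fetchmany(batch_size):
        yield batch


def run_full_load(
    source: sqlite3.Connection,
    target: sqlite3.Connection,
    query: str,
    target_table: str,
    transform: Callable[[tuple], tuple],
    history: list[dict],
    batch_size: int = 1000,
) -> dict:
    """Replace `target_table` with the transformed result of `query`, batch by batch.

    Appends one run record (status, row count, error, timestamps) to `history`.
    `target_table` is interpolated into SQL, so it must be a trusted identifier.
    """
    record = {"table": target_table, "started": datetime.now(timezone.utc),
              "status": "running", "rows": 0, "error": None}
    history.append(record)
    try:
        with target:  # commits on success, rolls back if any exception escapes
            # Full load: clear the target so each run reloads the complete dataset.
            target.execute(f"DELETE FROM {target_table}")
            for batch in extract_in_batches(source, query, batch_size):
                rows = [transform(row) for row in batch]
                placeholders = ", ".join(["?"] * len(rows[0]))
                target.executemany(f"INSERT INTO {target_table} VALUES ({placeholders})", rows)
                record["rows"] += len(rows)
                logger.info("loaded batch of %d rows into %s", len(rows), target_table)
        record["status"] = "success"
    except Exception as exc:
        record["status"] = "failed"
        record["rows"] = 0  # the transaction was rolled back, so nothing was loaded
        record["error"] = repr(exc)
        logger.exception("full load into %s failed", target_table)
    record["finished"] = datetime.now(timezone.utc)
    return record
```

Running the delete and all inserts in one transaction means a failed run leaves the previous load in place instead of a half-empty table, while the `history` list plays the role of the per-run integration records. A Spark-based pipeline would instead partition the work across executors rather than looping over batches in a single process.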

Benchmark

Check the detailed performance benchmark of OpenETL here: https://cdn.dataomnisolutions.com/main/app/benchmark.html