nanoGPT

The simplest, fastest repository for training/finetuning medium-sized GPTs. It is a rewrite of minGPT that prioritizes teeth over education.

Cost / License

- Free

- Open Source (MIT)

Platforms

- Python

- Mac

- Windows

- Linux

- BSD

Features

- Python-based

- AI-Powered

nanoGPT information

What is nanoGPT?

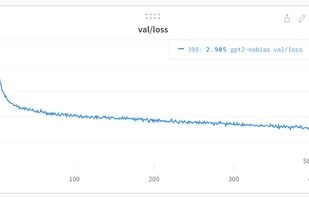

The simplest, fastest repository for training/finetuning medium-sized GPTs. It is a rewrite of minGPT that prioritizes teeth over education. It is still under active development, but train.py currently reproduces GPT-2 (124M) on OpenWebText, running on a single node with 8x A100 40GB GPUs in about 4 days of training. The code itself is plain and readable: train.py is a ~300-line boilerplate training loop and model.py is a ~300-line GPT model definition, which can optionally load the GPT-2 weights from OpenAI. That's it.
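
As a rough check on the "124M" label, the parameter count can be worked out from the GPT-2 small hyperparameters. The sketch below is not taken from nanoGPT: the function name `gpt2_param_count` is hypothetical, and the hyperparameters (12 layers, width 768, vocabulary 50257, context length 1024) are the published GPT-2 small values, which this page does not state. It assumes the standard GPT-2 layout with biases on every linear layer, two LayerNorms per block, a final LayerNorm, and an output head that shares its weights with the token embedding.

```python
def gpt2_param_count(n_layer: int, n_embd: int,
                     vocab_size: int = 50257, block_size: int = 1024) -> int:
    """Count trainable parameters of a GPT-2 style model (assumed layout)."""
    d = n_embd

    # Embedding tables: one row per token, one row per position.
    token_emb = vocab_size * d
    pos_emb = block_size * d

    # One transformer block.
    layer_norms = 2 * (2 * d)            # two LayerNorms, each with weight + bias
    attn_qkv = d * (3 * d) + 3 * d       # fused query/key/value projection
    attn_proj = d * d + d                # attention output projection
    mlp_fc = d * (4 * d) + 4 * d         # MLP expansion to 4x width
    mlp_proj = (4 * d) * d + d           # MLP projection back to model width
    per_block = layer_norms + attn_qkv + attn_proj + mlp_fc + mlp_proj

    final_norm = 2 * d                   # LayerNorm before the output head

    # The output head is assumed to reuse the token embedding matrix,
    # so it contributes no extra parameters.
    return token_emb + pos_emb + n_layer * per_block + final_norm


if __name__ == "__main__":
    n = gpt2_param_count(n_layer=12, n_embd=768)
    print(f"{n:,} parameters (~{n / 1e6:.1f}M)")  # 124,439,808 parameters (~124.4M)
```

The number of attention heads does not appear because it only changes how the width is split across heads, not how many weights there are.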

Because the code is so simple, it is very easy to hack to your needs, train new models from scratch, or finetune pretrained checkpoints (e.g. the biggest one currently available as a starting point would be the 1.5B-parameter GPT-2 XL model from OpenAI).