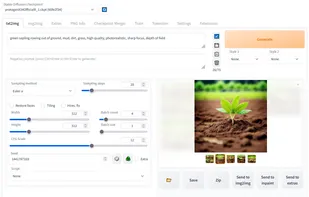

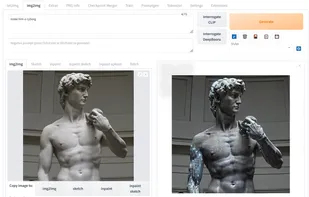

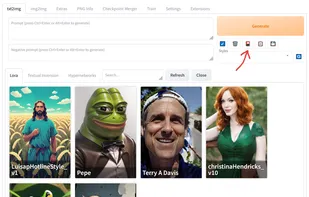

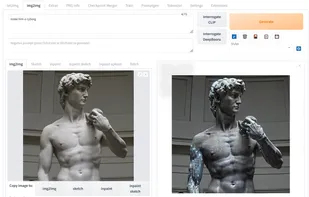

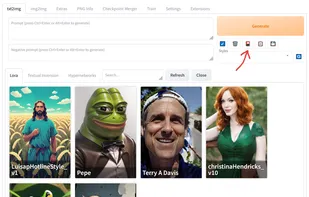

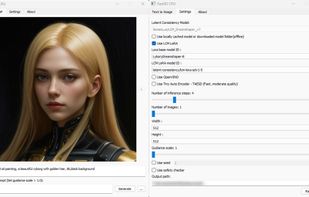

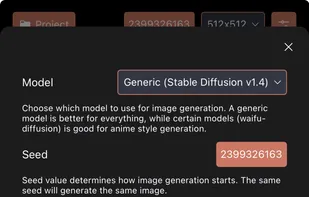

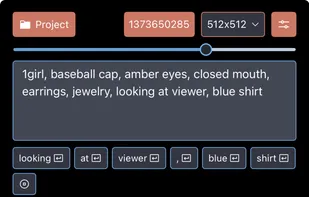

Local Dream is an app described as 'Run Stable Diffusion on Android Devices with Snapdragon NPU acceleration. Also supports CPU/GPU inference'. There are eight alternatives to Local Dream across a variety of platforms, including Windows, Linux, Mac, Android, and iPhone. The best Local Dream alternative is A1111 Stable Diffusion WEB UI, which is both free and open source. Other great apps like Local Dream are FastSD CPU, Neural Pixel, AmuseAI, and Local Diffusion.

AUTOMATIC1111's Stable Diffusion web UI provides a powerful web interface for Stable Diffusion, featuring a one-click installer, advanced inpainting, outpainting, and upscaling capabilities, built-in color sketching, and much more.

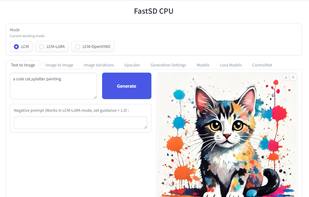

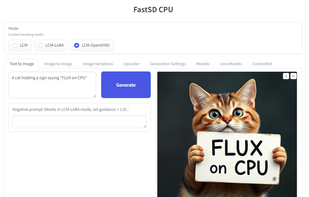

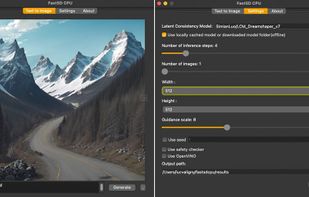

FastSD CPU is a faster version of Stable Diffusion on CPU, based on Latent Consistency Models and Adversarial Diffusion Distillation.

With Neural Pixel, you can use Stable Diffusion on practically any GPU that supports Vulkan and has at least 3 GB of VRAM. It offers a simple way to generate images without having to deal with CUDA/ROCm installations or hundreds of Python dependencies.

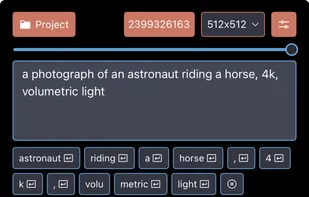

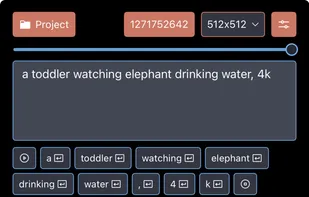

Draw Things provides a comprehensive yet easy-to-use mobile and desktop solution for AI-based art generation. It packs all the power of Stable Diffusion into a sleek iOS and Mac app that lets you create, upscale, and edit AI art totally offline, free, and privacy-safe.

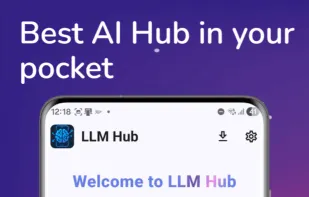

LLM Hub is an open-source Android app for on-device LLM chat and image generation. It is optimized for mobile use (CPU/GPU/NPU acceleration) and supports multiple model formats, so you can run powerful models locally and privately.

Mine StableDiffusion is a native, offline-first AI art generation app that brings the power of Stable Diffusion models to your fingertips. Built with modern Kotlin Multiplatform technology and powered by the blazing-fast stable-diffusion.