LM Studio

Discover, download, and run local LLMs.

Cost / License

- Free

- Proprietary

Platforms

- Mac

- Windows

- Linux

Features

- Ad-free

- Works Offline

- No registration required

- No Tracking

- Dark Mode

- AI Chatbot

- No Coding Required

LM Studio News & Activities

Recent News

- POX published news article about LM Studio

LM Studio 0.4 adds parallel model requests, server-native daemon and new stateful REST API

LM Studio 0.4 has been released, expanding its platform for discovering and running local open larg...

- Maoholguin published news article about LM Studio

LM Studio is now free for both personal and commercial use at work!

LM Studio is now free to use in professional settings, removing the need for separate licenses or p...

- Fla published news article about LM Studio

LM Studio 0.3.17 debuts Model Context Protocol support and more

LM Studio 0.3.17 now supports the Model Context Protocol, enabling connections to both local and re...

Recent activities

- Zack_indie added LM Studio as alternative to OpenClaw Launch

- POX added LM Studio as alternative to QVAC Workbench

- bugmenot added LM Studio as alternative to Nexa Studio

- bugmenot added LM Studio as alternative to AI00 RWKV Server

- bugmenot added LM Studio as alternative to RWKV Runner

Featured in Lists

Local AI is a curated list of software that lets you run and use AI directly on your own computer — without relying on …

List by faceinvader with 9 apps, updated

What is LM Studio?

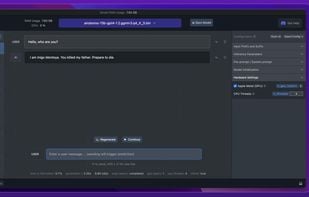

With LM Studio, you can:

- 🤖 Run LLMs on your laptop, entirely offline

- 👾 Use models through the in-app Chat UI or an OpenAI-compatible local server

- 📂 Download any compatible model files from Hugging Face 🤗 repositories

- 🔭 Discover new & noteworthy LLMs on the app's home page
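To illustrate what "OpenAI-compatible local server" can mean in practice, here is a minimal sketch in Python that sends one chat message to a locally running server using only the standard library. It rests on several assumptions that are not stated on this page: that the server has been started in LM Studio, that it listens on `http://localhost:1234/v1` (adjust `BASE_URL` to whatever the app shows), and that a model is already loaded. The model name `local-model` and the helper names `build_chat_request` and `chat` are hypothetical placeholders for this example.

```python
import json
import urllib.request

# Assumption: the local server address; change it to match your own setup.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="local-model", temperature=0.7):
    """Build an OpenAI-style chat completion payload.

    "local-model" is a placeholder; substitute the identifier of the
    model that is actually loaded.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }


def chat(prompt, base_url=BASE_URL, **kwargs):
    """POST the payload to /chat/completions and return the reply text."""
    payload = build_chat_request(prompt, **kwargs)
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    # OpenAI-style responses put the generated text here.
    return body["choices"][0]["message"]["content"]
```

With the server running, calling `chat("Say hello in one sentence.")` should return the model's reply as a string. Because the request shape follows the OpenAI chat completions format, existing OpenAI client code can often be pointed at the local address instead of a hosted one.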

Comments and Reviews

Easy to use: you can tell at a glance whether a model will run on your computer. It's also easy to deploy a local server. What else?

It's very suitable for beginners, easy to use, and has a clean and intuitive interface. Updating the program itself and its dependencies (like llama.cpp, CUDA, etc.) is also quite straightforward. It supports GPU acceleration, and you can easily configure the model during loading. However, it lacks a 'Chat with Documents' feature, and therefore does not support capabilities like RAG and embedding. It is also not open source.

I would give it a 5 if it weren't for an update that removed the option to select the chats folder. I have no other complaints whatsoever, but because of that one detail I'm thinking about switching to similar software.

Quite literally the only self-hosted GPU & CPU inference app that isn't a lie or complete paywall BS. It integrates with Hugging Face to find your preferred GGUFs. Why can't other apps be like this? Thanks, LM Studio, for the only no-BS app to run LLMs.