Janus

Autoregressive models for unified multimodal understanding and generation, featuring decoupled visual encoding pathways (Janus), an optimized training strategy (Janus-Pro), and rectified flow integration (JanusFlow).

Features

- Text to Image Generation

- AI-Powered

- Python-based


Janus information

What is Janus?

Unified multimodal understanding and generation models.

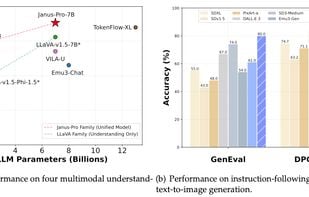

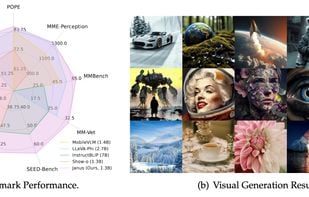

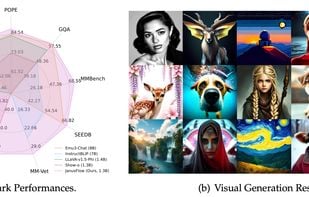

Janus is a novel autoregressive framework that unifies multimodal understanding and generation. It addresses the limitations of previous approaches by decoupling visual encoding into separate pathways for understanding and generation, while still using a single, unified transformer architecture for processing. The decoupling not only alleviates the conflict between the visual encoder's roles in understanding and generation, but also makes the framework more flexible. Janus surpasses previous unified models and matches or exceeds the performance of task-specific models. Its simplicity, flexibility, and effectiveness make it a strong candidate for next-generation unified multimodal models.
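The decoupling described above can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not Janus's actual code: every function name, the hidden size `D_MODEL`, the codebook size `CODEBOOK_SIZE`, and the stand-in encoders are hypothetical. It shows only the shape of the design: the understanding path turns an image into continuous features, the generation path works with discrete codebook ids, each path has its own adaptor into the embedding space, and both feed the same `shared_transformer`.

```python
from typing import List

Vector = List[float]
Image = List[List[float]]  # toy grayscale image, pixel values in [0, 1]
D_MODEL = 4                # hypothetical hidden size of the shared transformer
CODEBOOK_SIZE = 8          # hypothetical size of the generation codebook


def understanding_encoder(image: Image) -> List[Vector]:
    """Understanding path (toy stand-in): continuous per-row features (mean, max)."""
    return [[sum(row) / len(row), max(row)] for row in image]


def understanding_adaptor(features: List[Vector]) -> List[Vector]:
    """Map 2-d features into the D_MODEL=4 space with a fixed toy linear map."""
    return [[f[0], f[1], f[0] - f[1], f[0] + f[1]] for f in features]


def generation_tokenizer(image: Image) -> List[int]:
    """Generation path (toy stand-in for a VQ-style tokenizer): quantize each pixel to an id."""
    return [min(int(p * CODEBOOK_SIZE), CODEBOOK_SIZE - 1) for row in image for p in row]


# Toy embedding table for the generation path: one D_MODEL vector per codebook id.
GEN_EMBEDDING = [[i / CODEBOOK_SIZE] * D_MODEL for i in range(CODEBOOK_SIZE)]


def generation_adaptor(ids: List[int]) -> List[Vector]:
    """Look up an embedding for each discrete image id."""
    return [GEN_EMBEDDING[i] for i in ids]


def shared_transformer(seq: List[Vector]) -> List[Vector]:
    """One network for both tasks (toy stand-in: add the sequence mean to each position)."""
    mean = [sum(v[d] for v in seq) / len(seq) for d in range(D_MODEL)]
    return [[x + m for x, m in zip(v, mean)] for v in seq]


def generation_head(hidden: Vector) -> int:
    """Toy head: pick the codebook id whose embedding is closest to the hidden state."""
    def dist(i: int) -> float:
        return sum((h - e) ** 2 for h, e in zip(hidden, GEN_EMBEDDING[i]))
    return min(range(CODEBOOK_SIZE), key=dist)


def understand(image: Image, text_emb: List[Vector]) -> List[Vector]:
    """Understanding: image features come only from the understanding encoder."""
    return shared_transformer(understanding_adaptor(understanding_encoder(image)) + text_emb)


def generate(text_emb: List[Vector], num_tokens: int) -> List[int]:
    """Generation: predict image ids one at a time, feeding earlier ids back in.

    text_emb must be non-empty (the prompt); the same shared_transformer is used.
    """
    ids: List[int] = []
    for _ in range(num_tokens):
        hidden = shared_transformer(text_emb + generation_adaptor(ids))
        ids.append(generation_head(hidden[-1]))
    return ids
```

Because neither path reuses the other's encoder, each can be chosen to suit its own task, which is the flexibility the paragraph refers to. Decoding generated ids back into pixels (the job of a tokenizer's decoder) is omitted from this sketch.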

JanusFlow introduces a minimalist architecture that integrates autoregressive language models with rectified flow, a state-of-the-art method in generative modeling. Its key finding is that rectified flow can be trained straightforwardly within the large language model framework, with no need for complex architectural modifications. Extensive experiments show that JanusFlow achieves comparable or superior performance to specialized models in their respective domains, while significantly outperforming existing unified approaches across standard benchmarks. This work represents a step toward more efficient and versatile vision-language models.
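A minimal plain-Python sketch of the rectified-flow objective and sampler may make the idea concrete. It assumes the common convention where t=0 is a noise sample x0 and t=1 is a data point x1, with points moving along the straight line between them, so the training target is the constant velocity x1 - x0. In JanusFlow the velocity would be predicted by the language model conditioned on the prompt; here `v_theta` is any callable, and `make_oracle` is a hypothetical hand-written velocity field for a single target point, used only for illustration.

```python
from typing import Callable, List

Vector = List[float]
VelocityFn = Callable[[Vector, float], Vector]  # v_theta(x_t, t) -> predicted velocity


def interpolate(x0: Vector, x1: Vector, t: float) -> Vector:
    """Straight-line path from noise x0 (t=0) to data x1 (t=1)."""
    return [(1 - t) * a + t * b for a, b in zip(x0, x1)]


def rectified_flow_loss(v_theta: VelocityFn, x0: Vector, x1: Vector, t: float) -> float:
    """Mean squared error between the predicted velocity and the target x1 - x0."""
    xt = interpolate(x0, x1, t)
    target = [b - a for a, b in zip(x0, x1)]
    pred = v_theta(xt, t)
    return sum((p - q) ** 2 for p, q in zip(pred, target)) / len(target)


def euler_sample(v_theta: VelocityFn, x0: Vector, steps: int) -> Vector:
    """Integrate dx/dt = v_theta(x, t) from t=0 to t=1 with fixed-size Euler steps."""
    x, dt = list(x0), 1.0 / steps
    for k in range(steps):
        t = k * dt
        v = v_theta(x, t)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


def make_oracle(x1: Vector) -> VelocityFn:
    """Hypothetical ideal velocity field pointing at a single target x1 (valid for t < 1)."""
    def v(x: Vector, t: float) -> Vector:
        return [(b - a) / (1 - t) for a, b in zip(x, x1)]
    return v
```

With the oracle, the loss is zero (up to rounding) at every t < 1, and Euler sampling lands on the target: `euler_sample(make_oracle([1.0, 2.0]), [0.3, -0.7], steps=10)` returns approximately `[1.0, 2.0]`. A trained network only approximates this field, so in practice the number of sampling steps trades speed against accuracy.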

Janus-Pro is an advanced version of the earlier Janus. Specifically, Janus-Pro incorporates an optimized training strategy, expanded training data, and scaling to larger model sizes. With these improvements, Janus-Pro achieves significant gains in both multimodal understanding and text-to-image instruction following, while also making text-to-image generation more stable.

Comments and Reviews

Janus is a powerful and flexible AI model that delivers impressive multimodal generation and understanding. I appreciate its open-source nature and innovative approach to text-to-image tasks.

Janus is significantly easier to use than Stable Diffusion, and is FOSS. I've switched most of my projects to it since it's both more powerful and less compute intensive.