get-set, Fetch!

A Node.js web scraper. Use it from your own code, from the command line, or in a Docker container. Supports multiple storage options: SQLite, MySQL, PostgreSQL. Supports multiple browser or DOM-like clients: Puppeteer, Playwright, Cheerio, jsdom.

Features

- Command line interface

- Support for Docker

get-set, Fetch! information

What is get-set, Fetch!?

get-set, Fetch! is a plugin-based Node.js web scraper. It scrapes, stores, and exports data. At its core, an ordered list of plugins (default or custom-defined) is executed against each web resource to be scraped.
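The idea of running an ordered plugin list against a resource can be sketched as follows. This is a minimal illustration, not the get-set, Fetch! API: the `Resource` and `Plugin` shapes, `runPlugins`, and both example plugins are hypothetical names invented for this sketch, and the fetch step is a stub kept synchronous for brevity (a real fetch would be asynchronous).

```typescript
// Hypothetical shapes for illustration only; these are NOT the get-set, Fetch! API.
interface Resource {
  url: string;
  content?: string;
  title?: string;
  appliedPlugins: string[];
}

interface Plugin {
  name: string;
  // Whether the plugin should run for the resource in its current state.
  test(resource: Resource): boolean;
  // Fields to merge into the resource.
  apply(resource: Resource): Partial<Resource>;
}

// Run every plugin, in list order, against a single resource.
function runPlugins(plugins: Plugin[], resource: Resource): Resource {
  let current = resource;
  for (const plugin of plugins) {
    if (!plugin.test(current)) continue; // skipped plugins leave the resource untouched
    current = {
      ...current,
      ...plugin.apply(current),
      appliedPlugins: [...current.appliedPlugins, plugin.name],
    };
  }
  return current;
}

// Stub: stands in for an HTTP request or a browser page load.
const fetchPlugin: Plugin = {
  name: 'fetch',
  test: (r) => r.content === undefined,
  apply: (r) => ({ content: `<h1>Page at ${r.url}</h1>` }),
};

// Reads the first <h1> from the content produced by an earlier plugin.
const extractTitlePlugin: Plugin = {
  name: 'extract-title',
  test: (r) => r.content !== undefined,
  apply: (r) => {
    const match = /<h1>(.*?)<\/h1>/.exec(r.content ?? '');
    return { title: match ? match[1] : '' };
  },
};

const scraped = runPlugins([fetchPlugin, extractTitlePlugin], {
  url: 'https://example.com',
  appliedPlugins: [],
});
console.log(scraped.title, scraped.appliedPlugins);
```

Order matters in this sketch: with the two plugins swapped, the extraction plugin is skipped because no content exists yet when its turn comes.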

Supports multiple storage options: SQLite, MySQL, PostgreSQL. Supports multiple browser or DOM-like clients: Puppeteer, Playwright, Cheerio, jsdom.

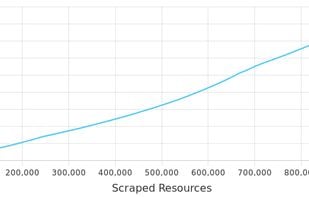

For quick, small projects under 10K URLs, storing the queue and scraped content in SQLite is fine. For anything larger, use PostgreSQL. You will be able to start, stop, and resume the scraping process across multiple scraper instances, each with its own IP and/or dedicated proxies. Using PostgreSQL, it takes 90 minutes to scrape 1 million URLs with a concurrency of 100 parallel scrape actions. That's 5.4 ms per scraped URL, averaged over the whole run.
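The per-URL figure follows from dividing the total run time by the number of URLs. The short sketch below works through that arithmetic from the numbers quoted above. The last value, the average wall-clock time a single scrape action can take, rests on the assumption that all 100 concurrency slots stay busy for the whole run, which the source does not state.

```typescript
// Figures quoted in the text: 1 million URLs, 90 minutes, 100 parallel scrape actions.
const urls = 1_000_000;
const totalMs = 90 * 60 * 1000; // 90 minutes in milliseconds
const concurrency = 100;

// Aggregate throughput across all parallel actions.
const msPerUrl = totalMs / urls;
const urlsPerSecond = urls / (totalMs / 1000);

// Assumption: every concurrency slot is busy for the whole run, so each
// individual scrape action may take `concurrency` times the aggregate figure.
const msPerAction = (totalMs * concurrency) / urls;

console.log(`${msPerUrl} ms per URL overall`);
console.log(`${urlsPerSecond.toFixed(1)} URLs per second`);
console.log(`${msPerAction} ms per individual scrape action`);
```

Under that assumption, the millisecond-scale figure describes overall throughput, while each individual page still has roughly half a second to load and be processed.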