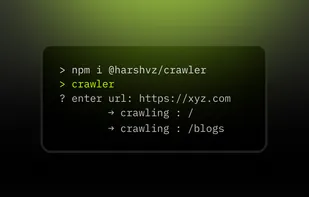

@harshvz/crawler

A flexible web crawler and scraping tool built on Playwright, supporting both BFS and DFS crawling strategies with screenshot capture and structured output. It is installable via npm and usable both as a CLI and programmatically.

Cost / License

- Free

- Open Source (Apache-2.0)

Platforms

- Mac

- Windows

- Linux

Features

- Browser Automation

- Crawler

Tags

- graph-traversal

- internal-links

- npm-package

- package

- screenshot-automation

- headless-browser

- playwright

- web-crawling

- typescript

- meta-data-extraction

- bfs

- dom-parsing

- dfs

- seo-analysis

- cli-tool

@harshvz/crawler News & Activities

harshvz added @harshvz/crawler as alternative to Scrapy, Apify, Scrapfly and UI.Vision RPA

@harshvz/crawler information

What is @harshvz/crawler?

crawler is a Playwright-based web crawler designed to turn websites into reusable knowledge artifacts.

Unlike traditional scrapers that focus on extracting isolated data fields, this tool focuses on capturing meaning-bearing content from real, JavaScript-rendered pages and preserving it in a form suitable for documentation, internal knowledge bases, and AI/LLM workflows.

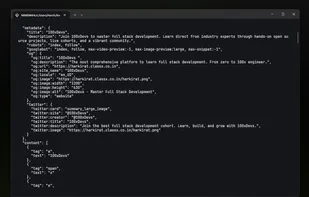

The crawler navigates websites using BFS or DFS strategies, renders each page in a real browser, and extracts core semantic elements such as metadata, headings (H1–H6), paragraphs, and inline text. The extracted content is stored as Markdown files, alongside full-page screenshots, providing both textual knowledge and visual ground truth for every crawled page.
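The difference between the two traversal strategies can be illustrated with a minimal sketch. This is not the package's actual API: `crawlOrder`, `Strategy`, `LinkSource`, and the in-memory `site` map are hypothetical names introduced for illustration. The sketch assumes a synchronous link lookup, whereas a real crawler would await a browser page load at that step.

```typescript
// Hypothetical types for illustration; not part of @harshvz/crawler.
type Strategy = "bfs" | "dfs";
type LinkSource = (url: string) => string[];

// Returns the order in which pages would be visited from `start`.
function crawlOrder(
  start: string,
  strategy: Strategy,
  getLinks: LinkSource,
  maxPages = 100,
): string[] {
  const frontier: string[] = [start];
  const visited = new Set<string>();
  const order: string[] = [];

  while (frontier.length > 0 && order.length < maxPages) {
    // BFS takes the oldest entry (queue); DFS takes the newest (stack).
    const url = strategy === "bfs" ? frontier.shift()! : frontier.pop()!;
    if (visited.has(url)) continue; // a page can be queued more than once
    visited.add(url);
    order.push(url);

    const links = getLinks(url);
    // For DFS, push in reverse so the first link on the page is explored first.
    const next = strategy === "dfs" ? [...links].reverse() : links;
    for (const link of next) {
      if (!visited.has(link)) frontier.push(link);
    }
  }
  return order;
}

// A tiny in-memory "site" standing in for real pages (assumed data).
const site: Record<string, string[]> = {
  "/": ["/a", "/b"],
  "/a": ["/c"],
  "/b": ["/c"],
  "/c": ["/"],
};
const linksOf: LinkSource = (url) => site[url] ?? [];

console.log(crawlOrder("/", "bfs", linksOf)); // [ '/', '/a', '/b', '/c' ]
console.log(crawlOrder("/", "dfs", linksOf)); // [ '/', '/a', '/c', '/b' ]
```

BFS visits every page one link away from the start before going deeper, which tends to suit shallow site overviews. DFS follows one branch to its end first, which can suit deeply nested documentation trees.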

The project is intentionally opinionated and minimal:

- It prioritizes content understanding over raw scraping speed

- It captures human-readable, context-preserving text

- It produces outputs that are immediately usable by humans and machines

At its core, crawler is built as a knowledge ingestion layer — a foundation for turning websites into structured documentation, searchable knowledge bases, or LLM-ready corpora, while remaining fully local, open-source, and developer-controlled.
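As a rough sketch of what storing extracted content as Markdown could look like, the following turns a page's metadata, headings, and paragraphs into a single Markdown string. The `PageContent` shape, the `toMarkdown` function, and the front-matter-style header are assumptions made for illustration. The project's real output layout may differ.

```typescript
// Hypothetical shape of one extracted page; not the package's real types.
type Block =
  | { kind: "heading"; level: 1 | 2 | 3 | 4 | 5 | 6; text: string }
  | { kind: "paragraph"; text: string };

interface PageContent {
  url: string;
  title: string;
  description?: string;
  blocks: Block[];
}

// Renders a page as Markdown: a metadata header, then content in page order.
function toMarkdown(page: PageContent): string {
  // Metadata values are written as-is; a real tool would need to escape them.
  const lines: string[] = ["---", `url: ${page.url}`, `title: ${page.title}`];
  if (page.description) lines.push(`description: ${page.description}`);
  lines.push("---", "");

  for (const block of page.blocks) {
    // Collapse whitespace left over from HTML layout and skip empty blocks.
    const text = block.text.replace(/\s+/g, " ").trim();
    if (text === "") continue;
    const line =
      block.kind === "heading" ? `${"#".repeat(block.level)} ${text}` : text;
    lines.push(line, "");
  }
  return lines.join("\n");
}

const samplePage: PageContent = {
  url: "https://example.com/",
  title: "Example",
  description: "Demo page",
  blocks: [
    { kind: "heading", level: 1, text: "  Hello   world " },
    { kind: "paragraph", text: "First paragraph." },
    { kind: "paragraph", text: "   " },
  ],
};

console.log(toMarkdown(samplePage));
```

Keeping headings at their original levels preserves the page's outline, which helps both human readers and downstream chunking for search or LLM use.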

As the project evolves, the focus is on making extraction more controllable and deterministic, allowing users to define what content is captured and how it is organized — without introducing black-box behavior or external dependencies.