Opera adds support for 150 built-in local Large Language Models in latest AI feature drop

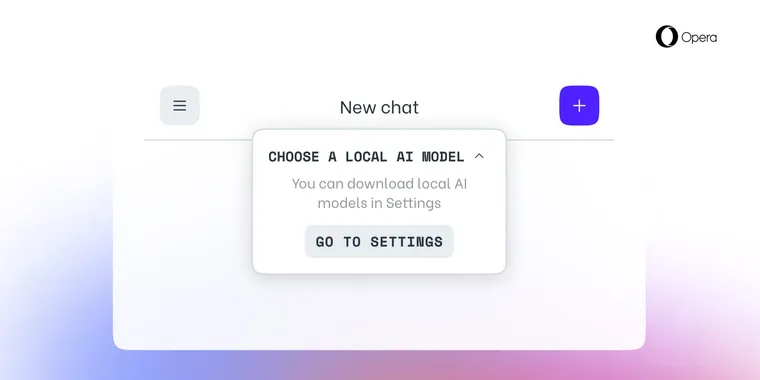

Opera has announced experimental support in its web browser for 150 local Large Language Model (LLM) variants from approximately 50 model families. The update is part of Opera's AI Feature Drops program and is initially available to Opera One users who receive developer stream updates.

Opera's announcement highlights that "This marks the first time local LLMs can be easily accessed and managed from a major browser through a built-in feature." The supported models include Meta's Llama, Vicuna, Google's Gemma, and Mistral AI's Mixtral, among other LLM families.

Running LLMs locally keeps users' data on their own device, so the AI can be used without sending information to a server. There are trade-offs, however. Each LLM requires between 2 and 10 GB of local storage space, and because output speed depends on the computing power of the user's hardware, local LLMs may generate responses more slowly than server-based models.

Once an LLM is downloaded, it replaces Aria, Opera's native browser AI, until a new chat with Aria is initiated or Aria is switched back on.

To test this feature, users can install or update their Opera One Developer web browser and follow the steps outlined in the announcement post.